Guests

- Jody Williams, winner of the 1997 Nobel Peace Prize for her work with the International Campaign to Ban Landmines. She is the chair of the Nobel Women’s Initiative.

- Stephen Goose, director of Human Rights Watch’s Arms Division.

Nobel Peace laureate Jody Williams is joining with Human Rights Watch to oppose the creation of killer robots — fully autonomous weapons that could select and engage targets without human intervention. In a new report, HRW warns such weapons would undermine the safety of civilians in armed conflict, violate international humanitarian law, and blur the lines of accountability for war crimes. Fully autonomous weapons do not exist yet, but high-tech militaries are moving in that direction, with the United States taking the lead. Williams, winner of the 1997 Nobel Peace Prize for her work with the International Campaign to Ban Landmines, joins us along with Steve Goose, director of Human Rights Watch’s Arms Division. [includes rush transcript]

Transcript

AMY GOODMAN: Killer robots? It sounds like science fiction, but a new report says fully autonomous weapons are possibly the next frontier of modern warfare. The report released Monday by Human Rights Watch and Harvard Law School is called “Losing Humanity: The Case Against Killer Robots.” According to the report, these weapons would undermine the safety of civilians in armed conflict, violate international humanitarian law, and blur the lines of accountability for war crimes. Scholars such as Noel Sharkey, professor of artificial intelligence and robotics at the University of Sheffield in England, also note that robots cannot distinguish civilians from combatants.

NOEL SHARKEY: There is nothing in artificial intelligence or robotics that could discriminate between a combatant and a civilian. It would be impossible to tell the difference between a little girl pointing an ice cream at a robot or someone pointing a rifle at it.

AMY GOODMAN: Fully autonomous weapons don’t exist yet, but high-tech militaries are moving in that direction, with the United States spearheading these efforts. Countries like China, Germany, Israel, South Korea, Russia and Britain are also experimenting with the technology.

Human Rights Watch and Harvard Law School’s International Human Rights Clinic are calling for an international treaty preemptively banning fully autonomous weapons. They’re also calling for individual nations to pass laws to prevent development, production and use of such weapons at the domestic level.

For more, we’re joined by two guests in Washington: Steve Goose, director of Human Rights Watch’s Arms Division, which released the new report on killer robots, and Jody Williams, who won the Nobel Peace Prize in 1997 for her work with the International Campaign to Ban Landmines. She’s also chair of the Nobel Women’s Initiative. Her forthcoming book is called My Name is Jody Williams: A Vermont Girl’s Winding Path to the Nobel Peace Prize.

Steve Goose and Jody Williams, we welcome you to Democracy Now! Jody, just describe what these weapons are. Killer robots—what do you mean?

JODY WILLIAMS: Killer robots are—when I first say that to people, they automatically think drones. And killer robots are quite different, in that there is no man in the loop. As we know with drones, a human being has to fire on the target. A drone is actually a precursor to killer robots, which are weapon systems—will be weapon systems that can kill on their own with no human in the loop. It’s really terrifying to contemplate.

AMY GOODMAN: Steve Goose, lay them out for us. And who is developing them?

STEVE GOOSE: Yeah, as Jody says, the frightening thing about this is that the robots themselves, the armed robots, will be making the decisions about what to target and when to pull the trigger. It’s just a very frightening development. The U.S. is at the lead on this in terms of research and development. A number of other countries have precursors and are pursuing this. Germany, Israel, South Korea, surely Russia and China, the United Kingdom, all have been doing work on this issue. It is for the future. Most roboticists think it will take at least 10, maybe 20, 30 years before these things might come online, although others think that more crude versions could be available in just a number of years.

AMY GOODMAN: I want to go to a clip from a video created by Samsung Techwin Company, which talks about weapons of the future.

SAMSUNG TECHWIN VIDEO: Samsung’s intelligence, surveillance and security system, with the capability of detecting and tracking, as well as suppression, is designed to replace human-oriented guards, overcoming their limitations.

AMY GOODMAN: Steve Goose, explain.

STEVE GOOSE: Well, this is—I mean, the system that they’re talking about is not yet fully autonomous, although it could become a fully autonomous system. For that particular one, you still have the potential for a human to override the decision of the robotic system. Even there, there could be problems, because it is unlikely that a human will actually override a machine’s decision. But this is the kind of system that we’re looking at that could become fully autonomous, where you take the human completely out of the picture. And they’re programmed in advance, but they can’t react to the variables that require human judgment.

AMY GOODMAN: Who is driving this? Who benefits from killer robots, as you call them?

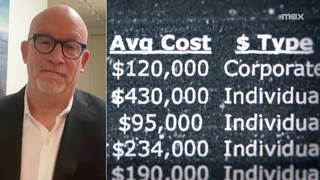

STEVE GOOSE: Well, no doubt there’s a lot of money to be made in these developments. And research labs for the militaries, as well as universities and private companies, are all engaged so far. We know that billions are going into development of autonomous weapons systems, some fully autonomous, some semi-autonomous. Even drones fall under that category. But, you know, there’s—certainly, there are going to be people in the military who see this as a great advantage, because you’re taking the human off the battlefield, so you are reducing military casualties. The problem there is that you’re putting civilians at greater risk in shifting the burden of war from the military, who’s trained to do this, to civilians.

AMY GOODMAN: I want to turn to Tom Standage, the digital editor at The Economist. He points out there might be a moral argument for robot soldiers, as you’ve pointed out, Steve.

TOM STANDAGE: If you could build robot soldiers, you’d be able to program them not to commit war crimes, not to commit rape, not to burn down villages. They wouldn’t be susceptible to human emotions like anger or excitement in the heat of combat. So, you might actually want to entrust those sorts of decisions to machines rather than to humans.

AMY GOODMAN: Let’s put that to Jody Williams.

JODY WILLIAMS: When you just had Noel Sharkey, you quoted him, and Noel, who is a roboticist himself, talks about the ridiculousness of considering that robotic weapons can make those kinds of decisions. He contends it is—it is a simple yes-no by a machine.

I think another part of the emotion that people don’t think about when they want to promote developing robots is that robots cannot feel compassion or empathy. Oftentimes in war, a soldier will factor in other circumstances in making a decision whether or not to kill. A robot—it’s very unlikely they would be able to do that.

Another point, though, for me, is, if we proceed to the point of having fully autonomous killer robots that can decide who, what, where, when, how to kill, we are putting humans at—technology will no longer be serving humans. Humans will be serving technology. And when American soldiers will not have to face death on the battlefield, how much easier will it be for this country to go to war, when we already go to war so easily? It really frightens me.

AMY GOODMAN: Jody, you won the 1997 Nobel Peace Prize for your work around landmines. You headed up the International Campaign to Ban Landmines. Do you see a trajectory here in this, what, 15 years?

JODY WILLIAMS: In terms of getting rid of weapons or in terms of the development—

AMY GOODMAN: In terms of the development of weapons and also the movement that resists it.

JODY WILLIAMS: Yes, I do. It’s obvious that weapons are going to continue to be researched and developed, unless there are organizations like Human Rights Watch, like the Nobel Women’s Initiative and others around the world who are willing to come together and try to stop them. We—we, who are starting to speak out against killer robots, envision a very similar campaign to the campaign that banned, you know, landmines, which, by the way, also received the Peace Prize in 1997. We are already working to bring together organizations to create a steering committee that would bring together a campaign to stop killer robots and strategize at the national, regional and international levels with partner governments, just like we did with landmines, and then Steve Goose, Human Rights Watch and other organizations did with their successful bid to ban cluster munitions in 2008.

AMY GOODMAN: Certainly the U.S. is on the forefront of robotic weapons. I mean, certainly drones fit into that category. We’re only beginning to see the movement as people lay their bodies at—on the line at Creech and Hancock, upstate New York, the places where the drones are controlled from. But what about the U.S.’s role in all of this? Let me put that question to Steve Goose.

STEVE GOOSE: Yeah. I mean, you raised drones again, and Human Rights Watch has criticized how drones have been used by the Obama administration, criticized it quite extensively. But we draw a distinction here. We think of the fully autonomous weapons, the killer robots, as being beyond drones. With drones, you do have a man in the loop who makes the decisions about what to target and when to fire. But with fully autonomous weapons, that is going to change. It’s going to be the robot itself that makes those determinations, which makes it—it’s the nature of the weapon, which is not really the main problem with drones.

The United States—we’re getting mixed signals. Clearly, the U.S. is putting a lot of money into this and a lot of the DOD’s, the military’s planning documents show that there are a great many who believe that this is the future of warfare, that they envision moving ever more into the autonomous region and that fully autonomous weapons are both desirable and the ultimate goal.

AMY GOODMAN: But explain how a robot makes this decision.

STEVE GOOSE: It has to be programmed. It will be programmed in advance, and they will give it sensors to detect various things and give it, you know, an algorithm of choices to make. But it won’t be able to have the kind of human judgment that we think is necessary to comply with international humanitarian law, where you have to be able to determine, in a changing, complex battlefield situation, whether the military advantage might exceed the potential cost to civilians, the harm to civilians. You have to be able to make the distinction even between combatants and civilians. A simple thing like that could be very difficult in a murky battlefield. So, we don’t think that—

AMY GOODMAN: Steve Goose, what about hacking?

STEVE GOOSE: Hacking can be a problem. I mean, the thing is, even if a killer robot sustains injuries on the battlefield, that might affect how it would be able to respond properly. Or there could be countermeasures that are put forward by the enemy to do this, as well.
