Guests

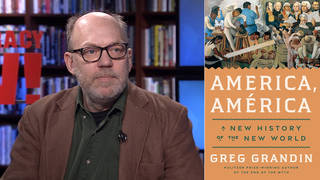

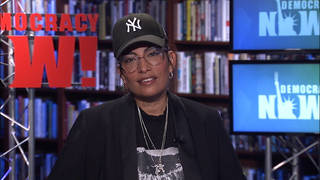

- Joy Buolamwini, computer scientist, researcher at the MIT Media Lab and founder of the Algorithmic Justice League.

The controversy over police use of facial recognition technology has accelerated after a Black man in Michigan revealed he was wrongfully arrested because of the technology. Detroit police handcuffed Robert Williams in front of his wife and daughters after facial recognition software falsely identified him as a suspect in a robbery. Researchers say facial recognition software is up to 100 times more likely to misidentify people of color than white people. This week, Boston voted to end its use in the city, and Democratic lawmakers introduced a similar measure for federal law enforcement. “This is not an example of one bad algorithm. Just like instances of police brutality, it is a glimpse of how systemic racism can be embedded into AI systems like those that power facial recognition technologies,” says Joy Buolamwini, founder of the Algorithmic Justice League.

Transcript

AMY GOODMAN: This is Democracy Now! I’m Amy Goodman, as we turn to the growing controversy over police use of facial recognition technology after the first known case of police wrongfully arresting someone in the United States based on the technology, though critics say there are likely many more cases that remain unknown.

It was January 9th when Detroit police wrongfully arrested Robert Williams, an African American man, in front of his own home, based on a false facial recognition hit. His wife and daughters, ages 2 and 5, watched him get arrested. This is Williams and his cousin Robin Taylor describing the ordeal in an ACLU video.

ROBERT WILLIAMS: I was completely shocked and stunned to be arrested in broad daylight in front of my daughter, in front of my wife, in front of my neighbors. It was — it was — it was — it was — I don’t even — I can’t really put it into words. It was — it was one of the most shocking things I ever had happen to me.

ROBIN TAYLOR: He could have been killed if he would have resisted. You know, being a Black man being arrested or having any encounter with the police, it could go badly. And, you know, that is something that we worry about in the Black family when someone has an encounter with the police.

AMY GOODMAN: After Detroit police arrested Robert Williams, they held him overnight in a filthy cell. His fingerprints, a DNA sample, mugshot were put on file. This is Williams describing how the officers interrogated him based on the false facial recognition hit.

ROBERT WILLIAMS: So, when we get to the interview room, the first thing they had me do was read my rights to myself and then sign off that I read and understand my rights. A detective turns over a picture of a guy and says — I know, and he’s like, “So, that’s not you?” I look. I said, “No, that’s not me.” He turns another paper over. He says, “I guess this is not you, either.” I pick that paper up and hold it next to my face, and I said, “This is not me.” I was like, “I hope you all don’t think all Black people look alike.” And then he says, “The computer says it’s you.”

AMY GOODMAN: But the next day, police told Williams, quote, “The computer must have gotten it wrong,” and he was finally released — nearly 30 hours after his arrest.

This comes as Democratic Senators Ed Markey and Jeff Merkley introduced a bill Thursday that would ban the use of facial recognition and other biometric surveillance by federal law enforcement agencies. On Wednesday, Boston City Council voted unanimously to ban its use in Boston. Earlier this month, Amazon placed a one-year moratorium on letting police use its facial recognition technology. And IBM has announced it’s pulling out of the facial recognition business entirely.

Well, our next guest is a computer scientist and coding expert who documents racial bias in this type of technology. Joy Buolamwini is a researcher at the MIT Media Lab, founder of the Algorithmic Justice League. She’s also featured in the documentary Coded Bias.

Welcome back to Democracy Now! It’s great to have you with us again, Joy. It was great to interview you first at the Sundance Film Festival.

But, Joy, talk about this case. Talk about the Williams case and its significance, how it happened. What’s the technology? And what’s happening with it now?

JOY BUOLAMWINI: Yes. So, the thing we must keep in mind about Robert Williams’ case is this is not an example of one bad algorithm. Just like instances of police brutality, it is a glimpse of how systemic racism can be embedded into AI systems like those that power facial recognition technologies. It’s also a reflection of what study after study after study has been showing, studies I’ve conducted at MIT with Dr. Timnit Gebru, Deb Raji, studies from the National Institute for Standards and Technology, showing that on 189 algorithms — right? — you had a situation where Asian and African American faces were 10 to 100 times more likely to be misidentified than white faces. You have a study, February 2019, looking at skin type, showing that darker-skinned individuals were more likely to be misidentified by these technologies. So it is not a shock that we are seeing what happened to Robert Williams.

What we have to keep in mind is this is a known case. And we don’t know how many others didn’t have a situation where the police or the detective says, “Oh, the computer must have got it wrong.” And this is an important thing to keep in mind. Oftentimes, even if there’s evidence in front of you — this man does not look like the picture — there is this reliance on the machine. And when you have a situation of confirmation bias, particularly when Black people are presumed to already be guilty, this only adds to it.

Now, the other thing I want to point out is, you can be misidentified even if you’re not where a crime happened. So, in April 2019, you actually had Ms. Majeed. She was a Brown University senior who was misidentified as a terrorist suspect in the Sri Lanka Easter bombings. She wasn’t in Sri Lanka. In the movie Coded Bias, the filmmaker shows a 14-year-old boy being stopped by police in the U.K. because of a misidentification. So, again, this is not an example of one bad algorithm gone wrong, but it is showing, again, that systemic racism can become systematic when we use automated tools in the context of policing.

AMY GOODMAN: Joy, let me play a clip from the documentary Coded Bias that shows that story, police in London stopping a young Black teen based on surveillance.

SILKIE: Tell me what’s happening.

GRIFF FERRIS: This young Black kid’s in school uniform, got stopped as a result of a match. Took him down that street just to one side and like very thoroughly searched him. It was all plainclothes officers, as well. It was four plainclothes officers who stopped him. Fingerprinted him after about like maybe 10, 15 minutes of searching and checking his details and fingerprinting him. And they came back and said it’s not him.

Excuse me. I work for a human rights campaigning organization. We’re campaigning against facial recognition technology. We’re campaigning against facial — we’re called Big Brother Watch. We’re a human rights campaigning organization. We’re campaigning against this technology here today. And then you’ve just been stopped because of that. They misidentified you. And these are our details here.

He was a bit shaken. His friends were there. They couldn’t believe what had happened to them.

Yeah, yeah. You’ve been misidentified by their systems. And they’ve stopped you and used that as justification to stop and search you.

But this is an innocent, young 14-year-old child who’s been stopped by the police as a result of a facial recognition misidentification.

AMY GOODMAN: So, that is an excerpt from Coded Bias, that premiered at the Sundance Film Festival. But, Joy, tell us more. He was 14 years old.

JOY BUOLAMWINI: Yes. And him being 14 years old is also important, because we are seeing more companies pushing to put facial recognition technologies in schools. And we also continuously have studies that show these systems also struggle on youthful faces, as well as elderly faces, as well as on race and on gender and so many other factors.

But I want to also point out that while we are showing examples of misidentifications, there’s the other side. If these technologies are made more accurate — right? — it doesn’t then say accurate systems cannot be abused. So, when you have more accurate systems, it also increases the potential for surveillance being weaponized against communities of color, Black and Brown communities, as we’ve seen in the past. So, even if you got this technology to have better performance, it doesn’t take away the threat from civil liberties. It doesn’t take away the threat from privacy. So the face could very well be the final frontier of privacy, and it can be the key to erasing our civil liberties, right? The ability to go out and protest, you have chilling effects when you know Big Brother is watching. Oftentimes there is no due process. In this case, because the detective said, “Oh, the computer must have gotten it wrong,” this is why we got to this scenario. But oftentimes people don’t even know these technologies are being used.

And it’s not just for identifying someone’s unique individual. You have a company called HireVue that claims to analyze videos of candidates for a job and take verbal and nonverbal cues trained on current top performers. And so, here you could be denied economic opportunity, access to a job, because of failures of these technologies.

So, we absolutely have to keep in mind that there are issues and threats when it doesn’t work, and there are issues and threats when it does work. And right now when we’re thinking about facial recognition technologies, it’s a high-stakes pattern recognition game, which equates it to gambling. We’re gambling with people’s faces. We’re gambling with people’s lives. And ultimately, we’re gambling with democracy.

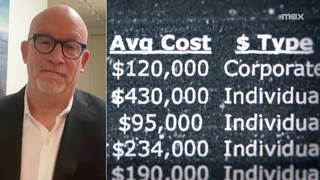

AMY GOODMAN: So, talk about the agencies that you understand are using this. I mean, you’ve mentioned this in your writing — Drug Enforcement Administration, DEA; Customs and Border [Protection], CBP; ICE. Explain how they are using them.

JOY BUOLAMWINI: Right. And in addition to that, you also have TSA. So, right now we have a Wild Wild West where vendors can supply government agencies with these technologies. You might have heard of the Clearview AI case, where you scrape 3 billion photos from the internet, and now you’re approaching government agencies, intelligence agencies with these technologies, so they can be used to have investigative leads — right? — or they can be used to interrogate people. So, it’s not a situation where there is transparency about the scope and breadth of its use, which is another situation where we think about due process, we think about consent, and we think about what are the threats of surveillance.

AMY GOODMAN: So, Joy, you have written, “We Must Fight [Face] Surveillance to Protect Black Lives.” If you can talk about the calls of the Black Lives Matter movement, people in the streets to defund police departments, to dismantle police departments? How does facial recognition technology fit into this?

JOY BUOLAMWINI: Absolutely. So, when we talk about defunding the police, what we have to keep in mind is, when resources are scarce, technology is viewed as the answer to save money, to be more efficient. And what we’re seeing are the technologies that can come into play — right? — can become highly optimized tools for oppression and suppression. So, as we’re thinking about how we defund the police, how we shift funds to actually uplift communities, invest in healthcare, invest in economic opportunities, invest in educational opportunities, we have to also understand that as a divestment from surveillance technologies, as well.

AMY GOODMAN: Talk about the recent announcements by IBM, Amazon, Microsoft, saying they’ll pause or end sales of their facial recognition technology. Do you think what they’re saying is enough? What exactly are they doing?

JOY BUOLAMWINI: So, IBM first came out to say that they will no longer sell facial recognition technology, which is the strongest position of all of these companies. It then prompted Microsoft to come out, as well as Amazon.

And what I want to emphasize is, they didn’t do this out of the kindness of their hearts, right? It took the cold-blooded murder of George Floyd, protest in the streets, years of organizing by civil liberties and rights organizations, activists and researchers to get to this point.

So, it is welcome news that you have household name companies stepping back from facial recognition technologies, because it underscores its dangers and its harms, but there are so many other companies that are big players in the space. We have to talk about NEC. We have to talk about Cognitech. We have to talk about Rank One. And so, all of this shows that we must think about this as an ecosystem problem. So, yes, it’s welcome news that we have three household tech giants stepping away from it, and it also elevates the conversation, but it cannot end there. And ultimately, we can’t rely on businesses to self-regulate, or think that businesses are going to put the public interest above the business interest. That is up to our lawmakers. And so, this is the moment to step up and enact laws.

AMY GOODMAN: So, talk about those laws, Joy. You have the Boston City Council — that’s where you are. You have the Boston City Council that — did they unanimously vote to not allow facial recognition technology? What does that mean? And now you have Congress, you have the Senate, just Thursday, introducing a facial recognition bill. And how much does that ban the use of federal law enforcement in using it?

JOY BUOLAMWINI: Absolutely. So, we did have a unanimous vote in Boston, 13 to 0, to ban government use of face surveillance technology in the city. I was happy to lend my expertise at a hearing, alongside many others calling for a ban, including 7-year-old Angel, who talked about not wanting this kind of technology when she’s going to school.

And so, what you’re seeing, not just in Boston — right? — second-largest state, but across the U.S., coast to coast — we saw the ban in San Francisco. We’ve seen Berkeley. We’ve seen Oakland. We’ve seen Brookline. We’ve seen Cambridge. We’ve also seen the state of California say no to facial recognition on bodycams. So, all of this shows that we have a voice, we have a choice. People are standing up. They’re resisting suppressive technology.

And also what this shows is, even if one city or one state makes a choice, we still have the rest of the American people to consider. So this is why I was extremely happy to see the recent act that was announced this Thursday for the Facial Recognition and Biometric Technology Moratorium Act, because now we can say, “Let’s at least provide base-level protections for all of the United States.” This is the strongest bill that has come out of Congress yet.

AMY GOODMAN: And finally, masks. How do they throw a wrench into the works? I mean, people wearing masks now to protect themselves and to protect the community — oh, all except the president of the United States, who doesn’t wear the mask. But, you know, how does it affect [facial] technology? We know that a lot of these protests all over the country have been filmed. Drones have been monitoring them. But what does it mean when people are wearing masks?

JOY BUOLAMWINI: It means that the technology becomes even less reliable, because to make a match, you want as much facial information as possible. So if I cover any component of my face, that is less information, so you’re likely to have more misidentifications. What happened to Robert Williams could become even worse. But it’s also normalizing having your face covered, right? And so, if more people cover their face or resist these technologies, it will also be harder to deploy. But masks make it even more likely that you will have misidentifications.

AMY GOODMAN: I want to thank you so much, Joy Buolamwini, for joining us, researcher at the MIT Media Lab, founder of the Algorithmic Justice League, featured in the documentary Coded Bias. To see our extended interview with her, you can go to democracynow.org.

When we come back, we look at how Johnson & Johnson has been ordered to pay more than $2 billion to a group of women who developed ovarian cancer after using Johnson & Johnson talcum powder, which is contaminated with asbestos. Johnson & Johnson heavily marketed the powder to African American women. Stay with us.
