Guests

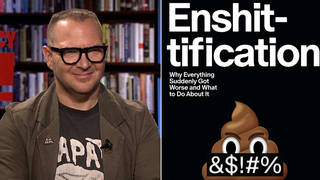

- Ramesh Srinivasan, professor of information studies at the University of California, Los Angeles, where he also directs the Digital Cultures Lab.

Thousands of internal Facebook documents leaked to media outlets continue to produce damning revelations about how the social media giant has prioritized its profits over user safety. The Facebook Papers have provided fresh evidence of how the company has let serious problems fester on its platform, including hate, misinformation, and human trafficking, and failed to invest in moderation outside English-speaking countries. The former Facebook product manager who shared the documents, Frances Haugen, is pressing lawmakers to more tightly regulate the company’s activities and testified Monday before the British Parliament ahead of scheduled meetings with officials in France, Germany and the European Union. Facebook CEO Mark Zuckerberg says the leaked documents paint a “false picture” based on cherry-picked evidence, but we speak with UCLA information studies professor Ramesh Srinivasan, who says they confirm what many critics have warned about for years. “This new form of digital capitalism that I believe Facebook is trailblazing is one that is playing with our intimate emotions on every single level.”

Transcript

AMY GOODMAN: Thousands of pages of internal documents leaked by former Facebook product manager turned whistleblower Frances Haugen are now the basis of a damning series of reports called the Facebook Papers being published this week. They show how the company’s choices prioritized profits over safety and how it tried to hide its own research from investors and the public. A new story published this morning by the Associated Press finds the Facebook documents show the platform ignored some of its own researchers’ suggestions for addressing vaccine misinformation. Facebook Chief Executive Mark Zuckerberg pushed back Monday during an earnings call with investors, calling the reports published, quote, “coordinated efforts to selectively use leaked documents to paint a false picture of our company,” unquote.

But fallout from the Facebook Papers continues to generate political support for increased regulation. After testifying before Congress earlier this month, on Monday Haugen testified for more than two hours before the British Parliament as the United Kingdom and the European Union are both planning to introduce new digital and consumer protection measures. She spoke about the social harm generated when Facebook’s platform is used to spread hate speech and incite violence without adequate content moderation in local languages, such as in Ethiopia, which is now engulfed by a civil war.

FRANCES HAUGEN: I think it is a grave danger to democracy and societies around the world to omit societal harm. To give like a core part of why I came forward was I looked at the consequences of choices Facebook was making, and I looked at things like the Global South, and I believe situations like Ethiopia are just part of the opening chapters of a novel that is going to be horrific to read. Right? We have to care about societal harm, not just for the Global South but our own societies, because, like I said before, when an oil spill happens, it doesn’t make it harder for us to regulate oil companies. But right now Facebook is closing the door on us being able to act. Like, we have a slight window of time to regain people control over AI. We have to take advantage of this moment.

AMY GOODMAN: Frances Haugen’s testimony comes as the news outlet The Verge reports Facebook plans to change its company name this week to reflect its transition from social media company to being a, quote, “metaverse” company. Facebook has already announced plans to hire thousands of engineers to work in Europe on the metaverse. In the coming weeks, Haugen is scheduled to meet with officials in France, Germany and the European Union.

For more, we’re joined by Ramesh Srinivasan, professor of information studies at the University of California, Los Angeles, UCLA, where he also directs the Digital Cultures Lab.

Welcome back to Democracy Now! It’s great to have you with us, Professor. Can you talk about the significance of what has been released so far in the Facebook Papers?

RAMESH SRINIVASAN: I think that — it’s great to be with you, Amy, and great to join you this morning.

I think that what Frances Haugen has done is blow the whistle on Facebook’s complicity and its internal knowledge of a number of problematic issues that many of us were alleging that they were engaging with for several years at this time. What Facebook has essentially done, and she has exposed that they were aware of, is play with our emotions, play with our psychologies, play with our anxieties. Those are the raw materials that fuels Facebook’s attempts to be a digital empire. And more generally, this is an example of how this new form of digital capitalism that I believe Facebook is trailblazing is one that is playing with our intimate emotions on every single level.

So, these revelations are extremely important. Right now the timing is very, very important for action to be taken to rein Facebook in, but to also, more generally, understand that this is the general face of Big Tech that we’re seeing more and more exposed in front of us.

JUAN GONZÁLEZ: And, Professor, when Frances Haugen talks about greater people control over AI, what does that mean? What would that look like, and especially in view of the other issue that she raised, which is the disparate impact of these social media platforms on the Global South, on less developed countries, where Facebook is pouring far less resources into moderating its content?

RAMESH SRINIVASAN: Yeah, both are extremely important questions, Juan. I mean, first, to the point about people’s relationship to AI, we tend to talk about AI these days as if it’s some sort of leviathan, but what we’re actually talking about when we’re discussing AI are the mechanisms by which various types of technology companies — mind you, which are the wealthiest companies in the history of the world, all of which are leveraging our lives, our public lives, our lives as citizens, even the internet that we paid for — it’s basically the mechanism by which they’re constantly surveilling us, they’re constantly transacting our attention and our data, and they’re playing with our psychological systems — our psychology, actually — in multiple ways. They’re getting us into fight-or-flight mode to trigger and activate our attention and our arousal, to keep us locked in, and to therefore behaviorally manipulate us. They’re also feeding us into groups that are increasingly hateful and divisive. We saw that with January 6th, for example. And the mechanism, the means by which they’re able to do that is by playing again with our emotions, taking us to these groups so we can recognize, falsely, that we are not alone in having particular viewpoints, that there are others with more hardcore viewpoints.

So, now if you look at those mechanisms of manipulation, of behavioral manipulation, which are actually quite common across Big Tech, but very specifically egregious in the case of Facebook, now let’s look at that when it comes to the Global South. The so-called Global South — we’re talking about the African continent, South America, South Asia, Southeast Asia and so on — represent the vast majority of Facebook’s users, because here we’re talking about users not just of Facebook the technology, but also platforms like WhatsApp and Instagram, as well. Now, in many of these countries, there is not necessarily a strong independent media or a strong sort of journalistic press to rebut or to even actually provide some sort of countervisibility to all the hate and lies that Facebook prioritizes, because we know — and Frances Haugen has confirmed this — that Facebook’s algorithm prioritizes divisive, polarizing and hateful content because of algorithmic and computational predictions that it will capture our attention, it will lock in our sympathetic nervous system.

So, if we look at these other countries in the world, we can see consistently, including right now at this very moment, when it comes to the Tigray people of Ethiopia, how hateful content is being prioritized. Facebook’s algorithms tend to work very well with demagogue leaders who spew hateful content. And they tend to rebut the voices, as has always been the case over the last several years, of minorities, of Indigenous peoples, of Muslims and so on. So this has real-life effects in terms of fomenting actual violence against people who are the most vulnerable in our world. And so, this represents a profound — not negligence by Facebook, but they recognize they can get away with it. Simply hire a few exploited content moderators in places like the Philippines, who are encountering PTSD, and then, basically, you know, let the game play out in these countries in the world, which represent the vast majority, again, of Facebook’s users.

JUAN GONZÁLEZ: And what is the impact of all of this on the democratic process? Because, in reality, are we facing the possibility that through algorithms of companies like Facebook we are actually clearly subverting the very process of people being able to democratically choose leadership and the government policies as a result of their being inundated with misinformation and hate?

RAMESH SRINIVASAN: I mean, that is absolutely what’s occurring, because all of us — imagine if billions of us — and we’re talking about approximately 3.5 or so billion people around the world with some access and engagement with Facebook technologies, recognizing that in many parts of the world Facebook is actually the internet, and WhatsApp is actually the cellphone network. So, what this means is it’s actually — we are turning to Facebook to be our media company, our gateway to the world, and to be our space of democratic communication, when it is anything but that, because imagine 3.5 billion people, all with their own screens, that are actually locking — that are unlocking them into a world that is not the public sphere in any sense at all, but is actually based upon polarization, amplification of hate, and, more than anything, the sweet spot, the oil of the new economy, which is people’s addiction and their attention. And there’s nothing that gets our attention more than being put into fight or flight, getting outraged, getting angry, getting just sort of agonized. And that is exactly what Facebook is doing, because we’re all being presented with completely different realities algorithmically on our screen, and those realities are not based on some open sense of dialogue or compassion or tolerance, but based on algorithmic extremism, based on all of this data that’s being gathered about us, none of which we have any clue about.

AMY GOODMAN: So, let’s talk about examples. I mean, you’re talking about the privatization of the global commons, because this is where —

RAMESH SRINIVASAN: Precisely.

AMY GOODMAN: — so many people, even with the digital divide, communicate. I mean, you have — the U.S. is 9% of the global population of Facebook, you know, the global consumers, so 90% are outside the United States. But 90% of the protections or 90% of the resources going into dealing with the hate are in United States, Facebook is putting in the United States. And even here, look at what happens. Didn’t Facebook set up a young woman — made pretend they were a young woman profile on Facebook, who said they supported Trump; immediately — and this is Facebook, this is a fictional person — saw she was inundated with requests to join QAnon, with hate? And then we see what happened on January 6th. This is when Facebook has poured in all of the so-called protections. Talk about its relation to January 6th, and then talk globally, where they’re almost putting nothing in other languages — for example, in Vietnam.

RAMESH SRINIVASAN: That’s such an important example, Amy. Thank you for bringing it up. Basically, our mechanisms of sort of engaging with the wider world, even in our country, as you point out, even here in the United States, are all based upon routing us down these algorithmic, opaque rabbit holes that get us more and more extreme — right? — where content that is more extreme is often suggested to us. And it’s often, as you alluded to, through the medium of a Facebook group, right? So, any sort of group that has sort of hateful speech associated with it, you know, that expresses outrage, that will activate our emotions — because those are the raw materials of digital capitalism, our emotions and our anxieties and our feelings — that’s exactly where they want to take us, because then that basically suggests to us, Amy, that you’re not alone: There are other people with viewpoints not necessarily even just like your own, but even more amplified, more radical. So, that ends up, as we saw, generating great amounts of violence and this brutal insurrection.

So, Facebook likes to claim that they are just supporting free speech, you know, that there are some bad actors gaming their platform, when in fact their platform is designed for bad actors to leverage their platform, because they have a highly symbiotic relationship. As we’ve spoken about in the past here on Democracy Now!, the former president was perfectly symbiotic in his relationship with Facebook. They were very, very good bedfellows. And we see that with demagogues around the world. So, now when we want to talk about the Global South, we can recognize that Facebook can basically say, “Hey, you know, we’re just — you know, we have different languages on our platform. Sure, we are talking to a few people in countries like Ethiopia. We talk to a few people in Myanmar, and never mind that they were basically responsible in many ways in fomenting a genocide against the Rohingya. You know, we are talking to people in the Modi government in India, who has said many demagogic things against Muslim minorities,” and the Facebook Papers have revealed this, that Frances Haugen has brought out, as well. This actually works very well for them.

Here in the United States, where we have strong enough or strongish independent media, thanks to Democracy Now!, we are able to challenge Facebook in the public sphere to some extent. But in other countries in the world, that doesn’t necessarily exist to the same extent. And Facebook can basically say, “Hey, we can just leverage the lives, the daily lives, 24/7/365, of billions of you and basically do whatever we want.” And, you know, they don’t really have to do much about it. They don’t have to actually take really any real steps to resolve the harms that they’re causing to many people around the world.

JUAN GONZÁLEZ: And, Professor, you mentioned India, the second-largest population in the world. And the Facebook Papers revealed that Facebook had — some of its managers had done a test of an average young adult in India, who became quickly flooded with Hindu nationalist propaganda and anti-Muslim hate. And one of the staffers who was monitoring this account said, quote, “I’ve seen more images of dead people in the past 3 weeks than I’ve seen in my entire life total.” Could you talk about the impact of the lack of accountability of Facebook in terms of what its platform is doing in a country like India?

RAMESH SRINIVASAN: And, you know, that hits home personally for me as someone of South Indian descent. I have been also part of WhatsApp groups with various friends or relatives in India that tend to spiral into — you know, going from a stereotype, for example, of a Muslim minority or an Indigenous minority and then quickly amplifying. And I think, in a country like India, which is, you know, in a sense, the world’s largest democracy, we see major threats to that democracy aligned with the Hindu nationalist government and also with the growth of Hindu nationalism, which, of course, as has always been the case, vilifies and goes after the Muslim minority, which is just absurd and just makes my stomach churn.

And this is exactly — what you just laid out, Juan, is exactly how the Facebook playbook works. You create an account. You kind of browse around. I mean, we don’t really browse anymore these days, but you sort of befriend various people, you look at various pages, and you quickly get suggested content that takes you down extremist rabbit holes, because, we all know, sadly, when we see pictures of dead people, when we see car crashes, when we see fires, we pay attention, because it activates our sympathetic nervous system. Facebook recognizes the easiest way it can lock people in, so it can monetize and manipulate them, is by feeding them that type of content. And that’s what we’ve seen in India.

AMY GOODMAN: So, finally, we just have 30 seconds, Ramesh, but I wanted to ask you about — you have Haugen now testifying before the British Parliament, going through Europe, very significant because they’re much more likely to regulate, which could be —

RAMESH SRINIVASAN: Yeah.

AMY GOODMAN: — a model for the United States. Talk about the significance of this.

RAMESH SRINIVASAN: I think it’s fantastic that the European Union and the U.K. want to take aggressive measures. Here in the United States, we have Section 230, which is protecting some of the liability of companies like Facebook from spreading misinformation and hate. We need to actually shift to think about a rights-based framework. You know, what are the rights we all have as people, as citizens, as peoples who are part of a democracy, in a digital world? We need to deal with the algorithms associated with Facebook. There should be audit and oversight and disclosure. And more than anything, people need their privacy to be protected, because we know, again and again, as we’ve discussed, vulnerable people get harmed. Real violence occurs against vulnerable peoples and polarizes and splinters our societies, if we don’t do anything about this. So, let’s shift —

AMY GOODMAN: Should Facebook or Meta — perhaps that’s what it’s going to be called, the announcement on Thursday.

RAMESH SRINIVASAN: Right.

AMY GOODMAN: Should it simply be broken up, like in the past, a century ago, Big Oil?

RAMESH SRINIVASAN: I think we may want to consider that Facebook is a public utility, as well, and we should regulate it accordingly. But more than anything, we need to force them to give up power and put power in the hands of independent journalists, people in the human rights space, and, more than anything, treat all of us, who they are using, constantly using, as people who have sovereignty and rights. And they owe us true disclosure around what they know about us. And we should be opted out of surveillance capitalism as a default.

AMY GOODMAN: Ramesh Srinivasan, we want to thank you for being with us, professor of information studies at UCLA and Digital Cultures Lab director, author of the book Beyond the Valley: How Innovators Around the World Are Overcoming Inequality and Creating the Technologies of Tomorrow.

When we come back, we go to Sudan, where at least 10 protesters have been shot dead following a military coup. Back in 30 seconds.
