Guests

- Jessica González, co-CEO of the media advocacy organization Free Press.

- Roger McNamee, author and early mentor to Facebook founder Mark Zuckerberg.

An unprecedented leak at Facebook reveals top executives at the company knew about major issues with the platform from their own research but kept the damning information hidden from the public. The leak shows Facebook deliberately ignored rampant disinformation, hate speech and political unrest in order to boost ad sales and is also implicated in child safety and human trafficking violations. Former Facebook product manager Frances Haugen leaked thousands of documents and revealed her identity as the whistleblower during an interview with “60 Minutes.” She is set to testify today before the Senate Commerce Subcommittee on Consumer Protection. “Their value system, which is about efficiency and speed and growth and profit and power, is in conflict with democracy,” says Roger McNamee, who was an early mentor to Mark Zuckerberg and author of “Zucked: Waking Up to the Facebook Catastrophe.” He says Facebook executives are prioritizing profits over safety. We also speak with Jessica González, co-CEO of the media advocacy organization Free Press and co-founder of Change the Terms, a coalition that works to disrupt online hate, who says this demonstrates Facebook is “unfit” to regulate itself. “We need Congress to step in.”

Transcript

AMY GOODMAN: We begin today’s show looking at a massive leak of tens of thousands of internal Facebook documents that show the social media’s own research indicates its algorithm helps boost disinformation, hate speech and political unrest around the world and that Facebook executives knew about it but kept the damning information hidden from the public. The leak also implicates Facebook in issues of child safety and human trafficking, while it prioritized profits over people’s welfare.

The documents were behind a sweeping investigation by The Wall Street Journal and were unveiled by whistleblower and former Facebook product manager Frances Haugen. She secretly copied the pages before leaving her job at the company’s Civic Integrity unit in May. Haugen spoke publicly for the first time Sunday on CBS’s 60 Minutes with reporter Scott Pelley.

SCOTT PELLEY: To quote from another one of the documents you brought out, “We have evidence from a variety of sources that hate speech, divisive political speech, and misinformation on Facebook and the family of apps are affecting societies around the world.”

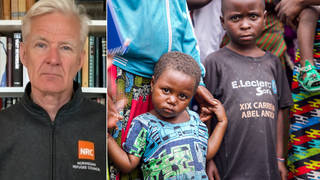

FRANCES HAUGEN: When we live in an information environment that is full of angry, hateful, polarizing content, it erodes our civic trust. It erodes our faith in each other. It erodes our ability to want to care for each other. The version of Facebook that exists today is tearing our societies apart and causing ethnic violence around the world.

AMY GOODMAN: Haugen has filed a federal complaint against Facebook and is testifying today before the Senate Commerce Subcommittee on Consumer Protection. According to her prepared remarks, she’ll call on lawmakers to take action against Facebook for the harm it poses to its users and the world. She is expected to say, quote, “When we realized tobacco companies were hiding the harms it caused, the government took action. When we figured out cars were safer with seat belts, the government took action. And today, the government is taking action against companies that hid evidence on opioids. I implore you to do the same here,” she said.

Today’s panel is also looking into how Facebook downplayed its knowledge that its photo- and video-sharing app Instagram is harmful for teen girls and tweens. This is Haugen again, speaking on CBS’s 60 Minutes to reporter Scott Pelley.

SCOTT PELLEY: One study says 13-and-a-half percent of teen girls say Instagram makes thoughts of suicide worse; 17% of teen girls say Instagram makes eating disorders worse.

FRANCES HAUGEN: And what’s super tragic is Facebook’s own research says, as these young women begin to consume this eating disorder content, they get more and more depressed, and it actually makes them use the app more. And so they end up in this feedback cycle where they hate their bodies more and more. Facebook’s own research says it is not just that Instagram is dangerous for teenagers, that it harms teenagers; it’s that it is distinctly worse than other forms of social media.

AMY GOODMAN: Lawmakers are also pursuing Facebook as part of a federal antitrust case and over its role in the January 6 Capitol insurrection. Documents leaked by Frances Haugen detail Facebook’s decision to dissolve its Civic Integrity unit after the 2020 election and before the assault on the Capitol. Her testimony comes a day after Facebook, Instagram and WhatsApp — also owned by Facebook — suffered a six-hour outage disrupting online communication worldwide. It’s the first time this has happened for so long.

For more, we’re joined in San Francisco by Roger McNamee. He was an early investor in Facebook. He was a Mark Zuckerberg mentor, then went on to write the book Zucked: Waking Up to the Facebook Catastrophe. And in Los Angeles, we’re joined by Jessica González. She is co-CEO of the media advocacy group Free Press and co-founder of Change the Terms, a coalition that works to disrupt online hate. She’s also a member of the Real Facebook Oversight Board.

We welcome you both to Democracy Now! Jessica, let’s begin with you. As you listen to this testimony of Frances Haugen and all of these documents that have been released, I mean, it was really amazing to see these congressional hearings in the last few weeks, where you have Facebook being confronted by senators, and the senators are saying, “These studies, that you did not reveal, like the damage you do to tweens and teen girls, are astounding.” And she said, “Oh, actually, they’re really bad studies.” They said, “No, they’re really good studies.” But, overall, your response to what’s taking place right now?

JESSICA GONZÁLEZ: Listen, Amy, good morning. I’m not surprised that Facebook knew that it was causing concrete harm to women, to people of color, to society as a whole. I’m not surprised it knew that it was spreading disinformation about the vaccine, the pandemic and other things. What I’m surprised about is just how blatantly they lied to the American public, including members of Congress, over and over again, about the extent to which they were causing harm here in the United States and around the world. That’s the shocking part here, is just the number of bald-faced lies repeated over and over again. It indicates clearly that Facebook is unfit to self-govern and that we need the U.S. Congress and the administration to step in and provide transparency and accountability.

JUAN GONZÁLEZ: And, Jessica, I wanted to ask you — in terms of Facebook’s record and its ability to monitor hate speech, you’ve also raised questions about the fact that it’s not just in the English language, given the enormous international reach of Facebook, in other languages, as well. Have you been noticing a difference between how it even monitors English-language material versus those in other languages across the country, given, for instance, the enormous impact that right-wing groups from Latin America had through WhatsApp and other Facebook posts during the election to reach Spanish-speaking people here in the U.S.?

JESSICA GONZÁLEZ: Yes, Juan, as bad as this situation is in English, it’s far worse in Spanish and other non-English languages. That’s why we worked together with partners from the Center for American Progress and National Hispanic Media Coalition to launch the Ya Basta Facebook campaign — “Enough Already, Facebook.” We were calling for concrete steps, investment in content moderation and AI systems, in the personnel needed to accurately and adequately monitor non-English content on Facebook. And we really got a complete blowoff from Facebook. In fact, Facebook wouldn’t even tell us who’s in charge of Spanish-language content moderation, how many moderators do they have, where are those people located, what are the systems and trainings in place. They won’t even provide baseline transparency.

Well, come to find out, part of what Frances Haugen helped reveal this week is that Facebook is not adequately invested in any language, besides perhaps English and French. They’ve made some investments in those areas, but they’ve utterly failed to make the investments necessary to make Facebook safe in other languages.

And I have to tell you, Juan, you know, if it were me and I was running Facebook and I hear from the United Nations that I played a contributing role in the genocide in Myanmar, I would have looked right away at this issue, at whether our moderation systems were keeping people safe, particularly around the globe. And so, the fact that that didn’t happen is appalling and unacceptable.

JUAN GONZÁLEZ: I’d like to bring in Roger McNamee to the conversation. Welcome to Democracy Now! And could you talk to us about your reaction to the latest revelations from Frances Haugen, and how you initially began to believe that Facebook was on a negative and dangerous course?

ROGER McNAMEE: Well, it’s a great honor to be back on the show with you all today. And I want to tip my hat to my friend Jessica, because I think she framed many of the problems exactly correctly. I also want to throw out a huge, you know, thanks to Frances Haugen. She is so courageous, so authoritative and so utterly convincing. I mean, this is a person who was in a position of enormous responsibility at Facebook, who is very technically competent, who saw these problems and had the courage to bring documents out to make sure the whole world knows. That level of courage is just — I mean, we should be all applauding it.

You know, my own experience, I was an adviser to Mark in the early days of Facebook. I mean, he was 22 when we met, and I advised him when the company — starting before it even had a newsfeed. My concerns became an issue for me in early 2016, and I reached out to Mark Zuckerberg and Sheryl Sandberg just before the election in 2016 to warn them, because I was afraid. I had seen these things going on that really bothered me, and it made me fear that the culture of Facebook, the business model and the algorithms were allowing bad people to harm innocent people in the context of civil rights, which is what Jess was just talking about, but also democracy. And, you know, I pleaded with him, because I said, you know, “You’re in a business that depends on trust. You have to get this right.” And I spent months trying to convince Facebook to do the right thing, starting before the election in 2016 and then continuing for several months after. That didn’t work, so I became an activist.

The key thing about Frances Haugen, and the reason that she has changed the game more in three weeks than I could in five years, is because she brought out documents that established definitively that the executives at Facebook were aware of the problems, and that in spite of warnings on things like COVID disinformation, on things like the insurrection, on things like the damage to teenage girls, they persisted.

And they persisted because of a business model that’s not just at Facebook. We can’t just let this be about Facebook, because the business model of surveillance capitalism, which is a concept that was coined by the professor Shoshana Zuboff at Harvard, this is a business model of using surveillance to gather data, in every possible context, and using that to both predict our behavior and to manipulate our choices and our behavior. And that is so fundamentally un-American, so fundamentally unethical, that we have to ban it. It’s as unethical as child labor. And it’s something being adopted by companies throughout the economy. Google invented it. Facebook adopted it. Amazon uses it. Microsoft, many other tech companies have become the leaders in it. But you see it in cars now and in smart devices. You see it all over the economy. And it’s incredibly dangerous.

AMY GOODMAN: You talked about the bravery of Frances Haugen. In fact, she is afraid, and that makes her even more brave. She’s taking on a trillion-dollar company.

ROGER McNAMEE: Yes.

AMY GOODMAN: Physically afraid what kind of retaliation Facebook could wage against her. Do you have thoughts on that, Roger?

ROGER McNAMEE: I think her strategy of maximum public attention is exactly right. I mean, let’s face it: The people who organized this communications strategy have done a brilliant job. And I really tip my hat to them, and I tip my hat to her. And I think her best defense is to be so visible that Facebook wouldn’t dare. You know, their past strategy has been to use ad hominem and to, essentially, invent stories. You remember the famous one about George Soros after he gave a speech at the Davos conference criticizing Facebook. You know, they hired a negative research company to invent an antisemitic story about George Soros and spread it through the press. That has been their past behavior.

I mean, listen, I don’t think these people are criminals, at least not in the way they think about the world. But I do believe they have a very different value system and that their value system, which is about efficiency and speed and growth and profit and power, is in conflict with democracy, and it’s in conflict with our right to make our own choices. And the country has to make a decision: Are we going to allow corporations to essentially replace the government as the people who control our lives, or are we going to recognize that the government is us and that it can, in fact, represent us, as long as we insist that it do so? And that’s what we have to do with Congress today. Frances is going in there to testify. And Congress will string this out, if we let them. And what we need to do is to say to them, “I’m sorry. It’s time to have safety laws, it’s time to have privacy laws, and it’s time to have new antitrust laws.”

AMY GOODMAN: I want to play a few clips, for one sec, Juan, just to give people a sense of where Congress is going. In this Senate hearing, Antigone Davis, the global head of safety of Facebook, faced questions on its internal research on young children and their use of the app. She was questioned by Connecticut Senator Richard Blumenthal, chair of the Subcommittee on Consumer Protection.

SEN. RICHARD BLUMENTHAL: I don’t understand, Ms. Davis, how you can deny that Instagram isn’t exploiting young users for its own profits.

ANTIGONE DAVIS: As someone who was a teenage girl herself and as someone who’s taught middle school and teenage girls, I’ve seen firsthand the troubling intersection between the pressure to — to be perfect, between body image and finding your identity at that age. And I think what’s been lost in this report is that, in fact, with this research, we found that more teen girls actually find Instagram helpful — teen girls who are suffering from these issues find Instagram helpful than not. Now, that doesn’t mean that the ones that aren’t aren’t important to us. In fact, that’s why we do this research. It’s leading to —

SEN. RICHARD BLUMENTHAL: Well, if I may interrupt you, Ms. Davis?

ANTIGONE DAVIS: — product changes and the ability — mm-hmm?

SEN. RICHARD BLUMENTHAL: These are your own reports. These findings are from your own studies and your own experts. You can speak from your own experience, but will you disclose all of the reports, all of the findings? Will you commit to full disclosure?

ANTIGONE DAVIS: I know that we have released a number of the reports. And we are looking to find ways to release more of this research. I want to be clear that this research is not a bombshell. It’s not causal research. It’s, in fact, just directional research that we use for product changes.

SEN. RICHARD BLUMENTHAL: Well, I beg to differ with you, Ms. Davis. This research is a bombshell. It is powerful, gripping, riveting evidence that Facebook knows of the harmful effects of its site on children and that it has concealed those facts and findings.

AMY GOODMAN: Facebook recently postponed the launch of its new Instagram app for kids under 13, once Haugen released these documents. During the Senate hearing last week, Massachusetts Senator Ed Markey questioned the global head of safety of Facebook, Antigone Davis, on the platform’s potential harm to children.

SEN. ED MARKEY: Will you stop launching — will you promise not to launch a site that includes features such as like buttons and follower counts that allow children to quantify popularity? That’s a yes or a no.

ANTIGONE DAVIS: Those are the kinds of features that we will be talking about with our experts, trying to understand, in fact, what is most age appropriate and what isn’t age appropriate. And we will discuss those features with them, of course.

SEN. ED MARKEY: Well, let me just say this. We’re talking about 12-year-olds. We’re talking about 9-year-olds. If you need to do more research on this, you should fire all the people who you’ve paid to do your research up until now, because this is pretty obvious. And it’s pretty obvious to every mother and father in our country, because all recent scientific studies by child development experts found that not getting enough likes on social media significantly reduces adolescents’ feelings of self-worth.

AMY GOODMAN: So, that’s it from the Senate hearing, but I want to end in these series of clips with Frances Haugen herself, the interview on 60 Minutes, when the former Facebook product manager explains how the social media’s algorithm works, and describes how the documents she leaked lay out Facebook’s decision to dissolve its Civic Integrity unit after the 2020 election and before the January 6th Capitol insurrection. She’s speaking with CBS’s Scott Pelley.

FRANCES HAUGEN: And one of the consequences of how Facebook is picking out that content today is that it is optimizing for content that gets engagement or reaction. But its own research is showing that content that is hateful, that is divisive, that is polarizing — it’s easier to inspire people to anger than it is to other emotions.

SCOTT PELLEY: Misinformation, angry content —

FRANCES HAUGEN: Yeah.

SCOTT PELLEY: — is enticing to people and —

FRANCES HAUGEN: It’s very enticing.

SCOTT PELLEY: — keeps them on the platform.

FRANCES HAUGEN: Yes. Facebook has realized that if they change the algorithm to be safer, people will spend less time on the site. They’ll click on less ads. They’ll make less money.

SCOTT PELLEY: Haugen says Facebook understood the danger to the 2020 election, so it turned on safety systems to reduce misinformation.

FRANCES HAUGEN: Too dangerous.

SCOTT PELLEY: But many of those changes, she says, were temporary.

FRANCES HAUGEN: And as soon as the election was over, they turned them back off, or they changed the settings back to what they were before, to prioritize growth over safety. And that really feels like a betrayal of democracy to me.

AMY GOODMAN: So, that’s Frances Haugen, who’s testifying today on Capitol Hill, on CBS’s 60 Minutes. Jessica González, you are the CEO of Free Press, and you’re co-founder of the Change the Terms coalition, which works to disrupt online hate. This key point, whether we’re talking about tween and teen girls or whether we’re talking about the insurrection, right before the insurrection, they knew the level of hate that was mounting and being amplified, and they turned off the monitors and the disruptors of that.

JESSICA GONZÁLEZ: That’s right. That’s right, Amy. You know, and not only did they know, the fix is so easy. They have a setting. They decided to turn it off before the election results were certified, despite spotting trends of fake information about a supposed steal of the election, despite seeing the activity budding on their site, despite seeing that there were militia groups organizing calls to arms on different parts of their networks. So this was a choice to put profits over public safety, over democracy, over the health and well-being of not just Facebook users but all of us. And this is why I don’t believe that Facebook is fit to govern itself. We need to step in.

And, you know, here’s what’s really insidious about how Facebook’s business model operates, which isn’t true of — like, maybe young girls receive negative messaging in magazines or on TV or on radio. But here’s what’s insidious, in particular, about how not just Facebook but other social media platforms earn their revenues. They are collecting and extracting our personal demographic data, the way we’re behaving on the sites, and they’re identifying what we might be vulnerable to — right? — our vulnerabilities, our predispositions, our behaviors. And they’re actually targeting us with things that they think we’ll engage more with. So this is targeted disinformation, targeted hate, targeted imagery that might have caused teen girls to feel worse about themselves, based on the extraction of our data, with — really, without informed consent. I don’t know that most people understand that we’re the product on Facebook. They’re selling us to their advertisers. And so, without even truly understanding how this works, we’re being used, and without any public interest good — in fact, a whole lot of harm. So we have to completely rethink the structures that underpin social media platforms. And we need to pass legislation, we need FTC investigations, to completely disrupt the hate- and lie-for-profit business model that is doing so much damage.

JUAN GONZÁLEZ: And, Roger McNamee, I wanted to ask you: What is the difference between what Facebook is doing now and what the old legacy media companies had done in the past? After all, those of us who came up in the old legacy media understood that the — the maxim, “If it bleeds, it leads,” that conflict and fear and division are what sell in media. To what degree is Facebook only magnifying the trends that have always existed in commercial media in the United States? Although, of course, at different times there were efforts to curb those media — the breakup of the Associated Press monopoly back in 1945 by the Supreme Court. There have been efforts — the breakup of the original NBC network because it had too much control over radio in America. Do you think it’s time to break up Facebook?

ROGER McNAMEE: So, Juan, really important question. So, Jessica talked about how this works at the individual level. Let’s think about how traditional media worked. It was a broadcast model, so one message for everyone. What internet platforms have done, and what surveillance capitalism does, broadly, so throughout the economy, is that it targets us individually.

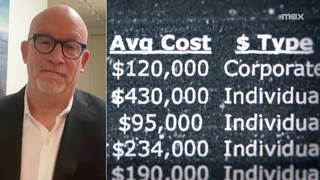

There is a giant marketplace in the United States for data. So, we call this third-party data. So, companies gather data in one place and then sell it to other people in other places for other kinds of uses, and they make additional money from doing that. So, everything that you do that touches something digital — so, if you travel, if you do a financial transaction, use a credit card, your home loan, whatever — anything you do on your phone, including your real-time location, anything you do in an app, anything that you do online, all of this is captured, and it’s all available in a marketplace.

So, these companies can create a digital model of you. And they do this for everybody, whether you’re on internet platforms or not. They have everybody in the system. They create the digital model, and they use that model to make predictions. Now, you can sell the predictions to an advertiser, or you can use them in other ways. For example, they’re used in the banking industry in order to give out mortgages. Police departments use it for predictive policing. And what have we learned? Those two things are based on artificial intelligence. They train artificial intelligence with historical data. The historical data is filled with biases. So you wind up moving to a world of digital redlining for mortgages, so that Black people can only live in certain communities; they can’t live wherever they want; they have different mortgage rates, different housing prices, because of this unfair stuff from the past. And you get things like predictive policing, which overpolice Brown and Black communities because, again, of historical biases that are built into these systems.

So, if you think about this, the manifest unfairness of it is magnified by a corporate culture that says the only people that matter are shareholders. And if you think about it, optimizing for shareholder value is like — it’s the equivalent of saying, “I’m just following orders.” It forgives all manner of sins. And when Frances Haugen was talking about the moral crisis of CEOs who maximize profits instead of the public good, one of the challenges here is that, as a country, we have accepted this notion that corporations should only worry about shareholder value.

That has to change. That is one of the things that Congress needs to do right now. They’ve done enough studies. We have done enough hearings. We now need to have something that looks like the Food and Drug Administration which requires that every technology product demonstrate that it is safe before it is allowed to go into the market. And that should apply to products that are in the market today. We need to have rules that say, “I’m sorry, but there should be no third-party marketplace for location data, health data, anything related to your usage of apps or the internet, maybe even financial data — things that are so intimate that they allow people to manipulate your choices.” And then we need to have new laws on antitrust, so that we can fight against entrenched corporate power.

AMY GOODMAN: Well, I want to thank you both so much. How well does Facebook know you? After 10 likes, better than colleagues. After 70 likes, better than friends. After 150 likes, better than family. And after 300 likes, better than your partner or spouse. Roger McNamee, author of Zucked: Waking Up to the Facebook Catastrophe, and Jessica González, co-CEO of Free Press, member of the Real Facebook Oversight Board and co-founder of Change the Terms, which works to disrupt online hate.

Next up, we look at the revelations in the Pandora Papers about the offshore financial dealings of the world’s richest and most powerful people, and the connections between offshore banking and colonialism. Back in 30 seconds.
