Guests

- Roger McNamee, author and early mentor to Facebook founder Mark Zuckerberg.

- Jessica González, co-CEO of the media advocacy organization Free Press.

Facebook whistleblower Frances Haugen testified to Congress Tuesday, denouncing the company for prioritizing “astronomical profits” over the safety of billions of users, and urging lawmakers to enact strict oversight over Facebook. Haugen’s testimony gave a rare glimpse into the secretive tech company, which she accused of harming children, sowing division by boosting hateful content, and undermining democracy. “Facebook wants you to believe that the problems we’re talking about are unsolvable. They want you to believe in false choices,” Haugen said at the hearing. Roger McNamee, a former mentor to Mark Zuckerberg, says a U.S. business culture “where CEOs are told to prioritize shareholder value at all costs” is partly to blame for Facebook’s design. “We have abdicated too much power to corporations. We have essentially said we’re not going to regulate them.” We also speak with tech reform activist Jessica González, who says Haugen’s testimony has exposed how little Facebook regulates its platform outside the English-speaking world. “Facebook has not adequately invested to keep people safe across languages,” says González. “There is a very racist element to the lack of investment.”

Transcript

AMY GOODMAN: We begin today’s show with the devastating testimony of Facebook whistleblower Frances Haugen. She testified for over three hours Tuesday during a Senate hearing about how — bombshell disclosures about the social media giant.

FRANCES HAUGEN: My name is Frances Haugen. I used to work at Facebook. I joined Facebook because I think Facebook has the potential to bring out the best in us. But I’m here today because I believe Facebook’s products harm children, stoke division and weaken our democracy. The company’s leadership knows how to make Facebook and Instagram safer but won’t make the necessary changes because they have put their astronomical profits before people. Congressional action is needed. They won’t solve this crisis without your help.

Yesterday, we saw Facebook get taken off the internet. I don’t know why it went down, but I know that for more than five hours, Facebook wasn’t used to deepen divides, destabilize democracies and make young girls and women feel bad about their bodies. It also means that millions of small businesses weren’t able to reach potential customers, and countless photos of new babies weren’t joyously celebrated by family and friends around the world.

I believe in the potential of Facebook. We can have social media we enjoy, that connects us without tearing apart our democracy, putting our children in danger and sowing ethnic violence around the world. We can do better. …

During my time at Facebook, first working as the lead product manager for civic misinformation and later on counterespionage, I saw Facebook repeatedly encounter conflicts between its own profits and our safety. Facebook consistently resolved these conflicts in favor of its own profits. The result has been more division, more harm, more lies, more threats and more combat. In some cases, this dangerous online talk has led to actual violence that harms and even kills people. This is not simply a matter of certain social media users being angry or unstable, or about one side being radicalized against the other. It is about Facebook choosing to grow at all costs, becoming an almost trillion-dollar company by buying its profits with our safety.

AMY GOODMAN: Facebook whistleblower Frances Haugen went on to urge lawmakers to take action against Facebook.

FRANCES HAUGEN: Facebook wants you to believe that the problems we’re talking about are unsolvable. They want you to believe in false choices. They want you to believe that you must choose between a Facebook full of divisive and extreme content or losing one of the most important values our country was founded upon, free speech; that you must choose between public oversight of Facebook’s choices and your personal privacy; that to be able to share fun photos of your kids with old friends, you must also be inundated with anger-driven virality. They want you to believe that this is just part of the deal.

I am here today to tell you that’s not true. These problems are solvable. A safer, free speech-respecting, more enjoyable social media is possible. But if there is one thing that I hope everyone takes away from these disclosures, it is that Facebook can change but is clearly not going to do so on its own. My fear is that without action, divisive and extremist behaviors we see today are only the beginning. What we saw in Myanmar and are now seeing in Ethiopia are only the opening chapters of a story so terrifying no one wants to read the end of it.

AMY GOODMAN: At Tuesday’s hearing, the Senate subcommittee chair, Democratic Connecticut Senator Richard Blumenthal, compared Facebook to Big Tobacco.

SEN. RICHARD BLUMENTHAL: And Big Tech now faces that Big Tobacco jaw-dropping moment of truth. It is documented proof that Facebook knows its products can be addictive and toxic to children. … Our children are the ones who are victims. Teens today looking at themselves in the mirror feel doubt and insecurity. Mark Zuckerberg ought to be looking at himself in the mirror today. And yet, rather than taking responsibility and showing leadership, Mr. Zuckerberg is going sailing. His new modus operandi? No apologies, no admission, no action, nothing to see here. Mark Zuckerberg, you need to come before this committee. You need to explain to Frances Haugen, to us, to the world and to the parents of America what you are doing and why you did it.

AMY GOODMAN: Hours after Facebook whistleblower Frances Haugen testified, Facebook CEO Mark Zuckerberg responded in a message posted on Facebook, writing, quote, “At the heart of these accusations is this idea that we prioritize profit over safety and well-being. That’s just not true.” Zuckerberg went on to write, “The argument that we deliberately push content that makes people angry for profit is deeply illogical. We make money from ads, and advertisers consistently tell us they don’t want their ads next to harmful or angry content,” he said.

To talk more about Facebook, we’re joined by two guests. In San Francisco, Roger McNamee is back with us, early investor in Facebook, mentor to the CEO, Mark Zuckerberg, then went on to write the book Zucked: Waking Up to the Facebook Catastrophe. And in Los Angeles, we’re joined by Jessica González, co-CEO of the media advocacy group Free Press and co-founder of Change the Terms, a coalition that works to disrupt hate online. She’s also a member of the Real Facebook Oversight Board. For those of you who watched Democracy Now! yesterday, these were our two guests leading up to the hearing. And we thought we’d have you back to see what you were most affected by, what were you most surprised by.

Roger McNamee, let’s begin with you today. You know Mark Zuckerberg well. You were his mentor, early investor in Facebook. Talk about how significant this testimony is. And can you explain one thing: What is this issue of the algorithm? How does it give the lie to what Zuckerberg said: “We’re not trying to increase hate and anger”?

ROGER McNAMEE: So, the thing here is there are two basic problems that we’re dealing with. One is the culture of American business, where CEOs are told to prioritize shareholder value at all costs. And it’s a little bit like the excuse “I’m just following orders,” right? That it absolves, essentially, all manner of sins. And that’s a big part of the problem at Facebook.

Essentially, think about the business this way. Advertising is the core of their economy. They get that through attention. And Facebook created a global network where people share things with their intimate friends. And what happened was, Facebook was the first medium on Earth to get access to what I call the inner self, the characteristics of people they would normally only disclose to their most intimate partners, friends, family. And in marketing, that stuff is gold. And the thing is, it’s not just valuable to traditional marketers. It’s incredibly valuable to scammers and people who are doing things that would otherwise be illegal. And if you think about what Facebook did, by connecting the whole world, it brought the world of scams into the mainstream.

So, when Mark says something like, “Well, you know, our advertisers consistently tell us they don’t want to be by hostile content,” the problem with that is that some of their biggest, most important advertisers are the actual people who spread dangerous content. So, if you think about “Stop the Steal,” that was an advertising campaign. If you think about anti-vax, those people are advertisers.

And so, the issue here for Facebook is they’ve created this network that is essentially an unpatrolled commercial place that preys on people’s emotions, because the best way to get people’s attention is to trigger fear or outrage. And so, the algorithms don’t sit there going, “I’m looking for fear or outrage.” What they do is they’re looking for things that get you to react. And it’s simply a fact of human nature, of human psychology, that fear and outrage are the most effective way to do that.

And that’s why Frances Haugen’s testimony is so devastating, because she is an expert in algorithm design. She is completely credible on this issue. And the stuff that she shared was not stuff that was her opinion. It was research created by the best people at Facebook at the direction of Facebook’s management. And so, when Facebook comes out afterward saying she only worked there for two years and she wasn’t in any of the meetings, none of that is relevant, and it’s sort of classic deflection by Facebook. And I would argue that Facebook’s responses yesterday really built Frances Haugen’s credibility, because if you sat there after that hearing, just ask yourself: Who did you find more credible?

JUAN GONZÁLEZ: Well, but, Roger, I wanted to ask you — one of the interesting aspects of the hearing was that both Democrats and Republicans on the Senate committee were equally hostile and skeptical in terms of the role of Facebook. But excuse me for being somewhat skeptical about the potential for real action here, because, I mean, on the one hand, there would be the alternative of actually breaking up Facebook, breaking up this huge Goliath, or even deeper reforms that address what you raise, which is the issue that Shoshana Zuboff has so brilliantly documented of the commodification of the self by the digital giant companies of our day, which Facebook is only one of them.

ROGER McNAMEE: Yeah.

JUAN GONZÁLEZ: So, what direction do you see potentially going in Congress to address what’s been revealed here?

ROGER McNAMEE: So, I think skepticism about Congress is still appropriate. But I will tell you, as somebody who works with Congress all the time, I took great joy in watching the hearing yesterday to see a Republican senator reach out to the chairman and suggest that the two of them co-sponsor a new piece of legislation to address one of the issues that came up, and then a second time a Republican talking about the national security aspect that Ms. Haugen talked about. And the idea of people on live television coming together, that is not something we’ve seen from Congress in a long time. And I agree with you: There are a lot of reasons to be skeptical.

But my perspective on this is, if I could get into that room with them, I’d say, “Listen, Facebook is the poster child for what’s wrong today. But the real problem is that in the United States we have abdicated too much power to corporations. We’ve essentially said we’re not going to regulate them, we’re not going to supervise what they’re doing. And in the process, we’ve allowed power to accumulate in a highly concentrated way, which is bad for democracy.”

But, worse than that, we’ve allowed business models, and, as you just described, surveillance capitalism, this notion of using surveillance to gather every piece of data possible about a person, the construction of models that allow you to predict their behavior, and then recommendation engines that allow you to manipulate their behavior — that that business model, which began with Google, spread to Facebook, Amazon and Microsoft, is now being adopted throughout the economy. You cannot do a transaction anywhere in the economy without people collecting data, which they then buy and sell in a third-party marketplace. And that is, in my opinion — and I think if you ask Shoshana Zuboff, she would agree with this — that that is as immoral as child labor.

And if I could sit these members of the Senate down, I’d say, “Listen, guys, you’re mad at Facebook today, but the way to solve the problem for kids, the way to solve the problem for democracy, the way to solve all of these problems” — and Jessica, I’m sure, is going to talk about the civil rights aspects of this, because they are humongous — “but the way to do that is to end surveillance capitalism, because if we can’t protect the rights of individuals — if you will, our human autonomy — what do we have?”

JUAN GONZÁLEZ: Well, I wanted to bring Jessica in to get her reaction to the hearing, but also just a few figures that I’ve dug up in terms of Facebook users: in the United States, about 200 million Facebook users; in Latin America, 280 million. More people in Latin America are using Facebook than in the United States itself. Haugen mentioned in her testimony what would happen in Myanmar and Ethiopia, the ethnic cleansing situations there that were powered, in some degree, by Facebook. Ethiopia only has 6 million Facebook followers, 6% of their population; Myanmar, 40% of their population. So, what did you find from the hearings, what Haugen mentioned, about this whole issue of how Facebook deals with integrity across languages and nations?

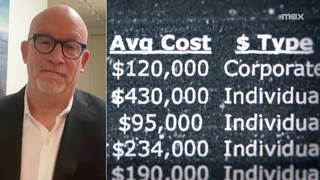

JESSICA GONZÁLEZ: Yeah, as someone who’s worked quite a bit on this issue through the Ya Basta Facebook campaign, where we’re calling out the disparities in how Facebook is enforcing its rules in English and Spanish, I was very interested in the revelation that 87% of Facebook’s investment in what they call integrity, the integrity of their systems, is devoted to English, despite that only 9% of Facebook users are using and speaking English on the platform. Another thing that Haugen revealed is that Facebook seems to invest a lot more in users who make more money, even though the risks to users are not necessarily distributed based on income. And of course they’re not, right? We know that in society and in our digital world, in particular, that women, people of color, religious minorities, ethnic minorities are often disproportionately targeted with bigotry. And so, it was very interesting to hear her reflect and share the internal information that reveals that Facebook has not adequately invested to keep people safe across languages.

So it should come as no surprise then that they played a contributing role in the genocide in Myanmar, that they are responsible for the rise in authoritarianism in the Philippines, in Brazil, in India and Ethiopia, because they have not implemented the type of mechanisms, they have not invested in the human personnel, that is required to conduct content moderation. And so, when I take that, coupled with Facebook’s incredibly disappointing and disrespectful response, and I layer on top of that how time after time Facebook has refused to offer any transparency whatsoever pertaining to the most basic questions about how they moderate content across languages in non-English languages, I’m horrified, I’m disgusted, and there’s a very racist element to the lack of investment across language. And I think that needs to be addressed right away.

You know, going back to Roger’s point about the need to tamp down on surveillance capitalism, I think that’s exactly right. And another thing that I’ve thought was really important that Haugen said is that we actually need to think through new regulatory frameworks. And I think the time is now for Congress to pass a data privacy and civil rights law that limits the collection of our personal data, that limits algorithmic discrimination, that provides greater transparency about what the companies are doing and what they know about the harms they’re causing across the globe, and that really provides accountability to not just the American public but people across the world.

So I think Congress needs to act. I was also encouraged by the real, legitimate questions that came from both sides of the aisle. This was much less of a circus than other hearings we’ve seen about these issues. It does seem like Congress is getting much more serious about this. I hope they act. I think your skepticism is totally valid. And I want to lift up here that the Federal Trade Commission has the authority to look into this more deeply right away. And that should be imperative. That should happen right away. And the Federal Trade Commission needs the budget and the investment to go ahead and start investigating this right now.

AMY GOODMAN: Jessica González, I wanted to go to Senator Amy Klobuchar questioning Frances Haugen about how Facebook’s algorithms may exacerbate the vulnerabilities of young girls with anorexia.

SEN. AMY KLOBUCHAR: I’m concerned that this algorithms that they have pushes outrageous content promoting anorexia and the like. I know it’s personal to you. Do you think that their algorithms push some of this content to young girls?

FRANCES HAUGEN: Facebook knows that their — the engagement-based ranking, the way that they pick the content in Instagram for young users, for all users, amplifies preferences. And they have done something called a proactive incident response, where they take things that they’ve heard — for example, like “Can you be led by the algorithms to anorexia content?” — and they have literally recreated that experiment themselves and confirmed, yes, this happens to people. So Facebook knows that they are leading young users to anorexia content.

AMY GOODMAN: Senator Klobuchar also questioned Frances Haugen about the role of Facebook in the January 6th attack on the Capitol.

SEN. AMY KLOBUCHAR: We’ve seen the same kind of content in the political world. You brought up other countries and what’s been happening there. On 60 Minutes, you said that Facebook implemented safeguards to reduce misinformation ahead of the 2020 election but turned off those safeguards right after the election. And you know that the insurrection occurred January 6. Do you think that Facebook turned off the safeguards because they were costing the company money, because it was reducing profits?

FRANCES HAUGEN: Facebook has been emphasizing a false choice. They’ve said the safeguards that were in place before the election implicated free speech. The choices that were happening on the platform were really about how reactive and twitchy was the platform, right? Like, how viral was the platform? And Facebook changed those safety defaults in the run-up to the election because they knew they were dangerous. And because they wanted that growth back, they wanted the acceleration of the platform back after the election, they returned to their original defaults. And the fact that they had to “break the glass” on January 6th and turn them back on, I think that’s deeply problematic.

AMY GOODMAN: So, they’ve kept their algorithms secret about how this works, but fully well know, since they tamped down before the election, Jessica González, on the reshares. You’re a specialist in hate online. What has to be done in this case?

JESSICA GONZÁLEZ: I mean, listen, they not only need to turn these safety protocols back on, they need to update their policies, but they really need to invest in the human personnel necessary to moderate hate. Another thing that Haugen said yesterday is that even with the best AI systems, those systems are going to only catch 10 to 20% of hate speech. We need more humans across languages to protect people, to protect the integrity and to tamp down on lies, bigotry, authoritarianism and violence that’s budding on Facebook.

The other thing we’ve been asking them to do for years now is to ban white supremacists and conspiracy theorists. And Haugen underscored yesterday that the same people who are spreading hate are prone to spreading lies. Facebook knows who these people are. They have decided to leave them up. There’s a pattern of abuse here that Facebook has overlooked. And what Haugen has shown us is that Facebook has had actual knowledge and has extensively covered up the serial — in a serial way, the global harms that it’s causing. And it needs to correct course immediately.

JUAN GONZÁLEZ: And, Jessica, I just wanted to ask you one — we only have a few more seconds, but if you could comment on the allegations by Facebook that it catches 90% of the hate speech?

JESSICA GONZÁLEZ: Yeah, they’ve said this over and over again. Yet Haugen and the documents that Facebook’s own research inform indicate they’re only catching 3 to 5%. So, I actually looked. Mark Zuckerberg has told this number to me personally. He has said this time and time again before Congress. And, you know, I saw the SEC documents that Haugen filed yesterday. He’s also shared this with shareholders. And so, this really needs to be looked into. They’re materially misleading the public and shareholders about the nature and the quality of their content moderation in regards to hate speech.

AMY GOODMAN: Jessica González, co-CEO of Free Press, member of the Real Facebook Oversight Board, co-founder of Change the Terms, which works to disrupt hate online. Roger McNamee, author of Zucked: Waking Up to the Facebook Catastrophe, early investor in Facebook, mentor to Mark Zuckerberg.

Coming up, we go to California to look at this weekend’s devastating oil spill and the calls to ban offshore drilling. Stay with us.