Guests

- Anjan Sundaram, award-winning journalist and author.

- David Wallace-Wells, author and New York Times opinion writer.

We look at artificial intelligence with two guests who have written extensively about the topic. Award-winning journalist Anjan Sundaram hosted the television miniseries Coded World for Channel NewsAsia, Singapore’s national broadcaster, on how artificial intelligence is changing humans. David Wallace-Wells is a New York Times opinion writer who wrote a piece headlined “A.I. Is Being Built by People Who Think It Might Destroy Us.”

Transcript

AMY GOODMAN: This is Democracy Now!, democracynow.org, The War and Peace Report. I’m Amy Goodman, with Nermeen Shaikh.

Today we look at artificial intelligence with two guests who have written extensively about the topic. Anjan Sundaram is still with us. He hosted a television miniseries titled Coded World for Channel NewsAsia, Singapore’s national broadcaster, on how artificial intelligence is changing humans.

ANJAN SUNDARAM: What is an algorithm, and how does it affect people’s lives? I’m Anjan Sundaram, journalist and mathematician. I want to uncover the truth behind this code that is everywhere — and seems to be changing everything. What are you?

QUEEN PUPPET: I am my computational knowledge engine.

ANJAN SUNDARAM: Algorithms are making important choices for us, even when we don’t realize it.

ZEYNEP TUFEKCI: They’re trying to change your mind on politics.

ANJAN SUNDARAM: Algorithms are impacting our freedom. Computer systems are now watching us?

GENERAL DOGAN: They make you feel like you’re public enemy number one.

ANJAN SUNDARAM: Algorithms are becoming more human.

ERICA: [translated] You realize that I’m a robot?

SAMER AL MOUBAYED: We’re trying to understand life and break it down into code.

FURHAT ROBOT: What do you want me to do next?

HATSUNE MIKU: [translated] Good afternoon.

AKIHIKO KONDO: [translated] This is my wife.

ANJAN SUNDARAM: Algorithms are changing our future. I can move something with my brain?

TAN LE: Yes.

ANJAN SUNDARAM: My god.

LINUS NEUMANN: Most people don’t know the limitations of an algorithm.

ANJAN SUNDARAM: The coded world is something I can no longer ignore.

AMY GOODMAN: That’s the trailer for the series Coded World by award-winning journalist Anjan Sundaram, who is still with us in Mexico City, along with David Wallace-Wells, New York Times opinion writer, who wrote a piece in March headlined “A.I. Is Being Built by People Who Think It Might Destroy Us.”

We welcome you both to Democracy Now! Anjan, I want to begin with you. For this series you did in Coded World, you traveled from Japan to India to China, across Europe, talking to robots. Explain this series.

ANJAN SUNDARAM: Oh, sure. So, the idea of the series was really, you know, about how mathematics is now so pervasive and sort of infiltrating our lives, you know, and it’s influencing the choices that we’re making. It’s influencing how we think of ourselves, who we think we are. We now have — just as an example, we now have digital versions of ourselves, you know, built by Amazon and Google and major technological companies, existing in the cloud, that are trying to predict — not only replicate who we are, but trying to predict what we’ll want, what we’ll want to purchase, and what they can sell us. And this is part of, as I see it, a larger trend, a trend that has been at play for hundreds — possibly hundreds of years, possibly longer, whereby we try to abstract the world and ourselves through mathematical code.

And I think the — you know, in my personal opinion, the fears of extinction, of where this is taking us, are real and very well founded. It’s striking how David was speaking earlier about, you know, the pollution in New York City. And at the same time, we have Apple marketing and launching a new headset. I think the tech billionaires of the world are leading us to a form of escapism, whereby, you know, some are telling us we’ll go and colonize Mars, some are telling us we’ll go and live in the metaverse, but what they’re really all doing is telling us that we’re going to turn away from many of the major problems that are facing us today in the world, the physical world, that we need to protect, in our environment, ourselves, our neighbors, our families. And it’s far too easy now to think of technology as offering us an easy escape, rather than facing the hard work that we need to do in order to protect our environment. And that was one of the key takeaways that I took, that I came away with, as I traveled across the world meeting people who are building this new technological world and shaping a digital existence for us.

NERMEEN SHAIKH: Anjan, can you talk about — what did you find when you went to China, to Japan, to India about the kind of technology that’s being developed, the differences between the kind of technology, and also if there are, and if so, what kind, regulations in place around artificial intelligence?

ANJAN SUNDARAM: Indeed, and I think there’s a big difference. There is a stark difference. In Asia, you know, there’s a different attitude from the government, also from the people. There’s far less regulation. There’s far less fear of AI. You know, the governments and the people embrace the technology. Asian people whom I met were very willing to give up their private data to governments, saying, you know, they relied and trusted the governments to protect them.

On the other hand, you had the government and companies inventing devices such as the Neuro Cap, which is a headset that factory owners are now placing on employees’ heads to monitor their brainwaves as they’re working, and to ascertain for themselves, you know, whether a worker is tired, whether they’re working efficiently, but also, you know, to monitor their thoughts and whether they’re lying about whether they need a bathroom break. So it’s a very authoritarian vision of the use of technology. On the other hand, you also had —

AMY GOODMAN: Wait, wait, wait. Anjan, before you talk about the other hand, can you explain what exactly you just said? Who is doing that?

ANJAN SUNDARAM: So, I was in Ningbo, a city in southern central China, and I was speaking — I visited a factory where a former university professor had developed this — he called it a Neuro Cap, and he called it a revolution in industrial technology, whereby — you know, I sat with some of the workers, I sat with some of the developers of this technology, and I wore the Neuro Cap myself. And I watched as it monitored different frequencies of my own brainwaves, and would tell me — it would tell the employer — of course, it wouldn’t tell the workers themselves, but it would tell the employer, you know, whether the worker had worked too much, worked too little, whether they were focusing on their job, whether they were thinking about something else.

And to the employer, this seemed a revolutionary way, in their words, of monitoring their employees and understanding whether they could be more efficient. In a sense, to me, it was converting and transforming the human employee into something much, much more akin to a robot or an automaton by providing very intimate private information about their thoughts, their very thoughts, their most private thoughts, to employers, who were able to monitor their productivity and determine whether they were good workers or bad workers or whether they actually needed a bathroom break or not. And that’s really happening.

NERMEEN SHAIKH: And, Anjan, apart from that, was there just one factory that you visited where this Neuro Cap was being used, or has it become more widespread? What do you know about this?

ANJAN SUNDARAM: I mean, the idea is to market this technology far more widely. And as I mentioned, there’s very little sense of a need for regulation. So, in countries such as China, there’s a far greater sense of embracing technology and its productivity benefits. And so, there was very little — in fact, it was — at one point, when my questions to the employer became somewhat tense, they threatened to throw us out and have us delete our footage, because they had not contemplated — this is what was striking. They had not contemplated that I might ask, you know, suspicious or skeptical questions about this technology, and they thought it was the greatest thing. And suddenly, when we —

AMY GOODMAN: Clearly, their mistake was, you didn’t have a Neuro Cap on when you were doing the interview, or before, for them to predict. But I really have a question here, when you said they can tell your thoughts. This Neuro Cap might be able to tell that you are thinking, but not what your thoughts are.

ANJAN SUNDARAM: Not at this point. And indeed, you know, the tech — but it’s quite powerful. In California, a similar technology, a headset that I wore, allowed me to control a drone. I was able to control a drone merely by using my thoughts, you know, to tell it to fly or land or stop or fly at greater speeds. And so, there are frequencies and parts of our, you know, brainwave spectrums that we are not — we’re not aware of, but the sensors can read. And so they can read things about ourselves. The feeling I got from that experience of controlling the drone was that this technology may know parts of me better than I know myself. And so, this is — it’s not a simple, you know, question about — of just merely reading your particular thoughts. It’s actually access to who you are and, you know, what you’re thinking, in areas that you might not be aware of yourself and that we might consider right now subconscious, you know, beyond our awareness.

NERMEEN SHAIKH: That’s truly extraordinary and also quite terrifying. Can you talk about what you learned when you went to Japan, both in terms of the uses, the research that’s going on, the regulations in place, and also in India?

ANJAN SUNDARAM: Sure, yes. One of the most striking conversations I had in Japan was with an AI researcher. And I asked her if we should be afraid of artificial intelligence and where it’s heading. And she said to me, “I think that’s the wrong question, because we are building AI.” And from her perspective, we’re building AI in our own image. And so why should we be afraid of ourselves? And why should we be afraid of knowing who we are? And I thought that was really telling.

In the West, we often see AI as an adversarial technology, whereas in places like Japan, they approach it from, you know, a culture of Buddhism, of just another tool whereby we build out technology in a constructive way in order to know who we are and know ourselves. And one of the clips that you played before, at the beginning of the segment, was of me with a robot, which was — and when I spoke to the creator of that robot, he said very much the same thing: “I’m trying to understand what the human is, by building a technological replica of who we are.”

NERMEEN SHAIKH: Was he the guy who introduced a robot as his wife in the trailer that we played?

ANJAN SUNDARAM: That was quite — that was someone else. Yes, I did meet a Japanese man who — again, this is telling. This is — you know, he had married a hologram. And to him, that piece of code that, you know, underlay the hologram was real enough and human enough for him to consider that code as a life partner. And, you know, when he comes — he was telling me how, when he comes back from work, he’s walking back from work, he texts the code, or he communicates with her, he communicates with the hologram, and tells her, “Hey, I’m coming home,” and she turns on the lights and turns on the heating in his apartment, and, you know, shows him care in that way. And for him, this is sufficient relationship with a piece of code. And we can criticize it. We can, you know, judge it. We can laugh at it. But I think it tells us how real the code is becoming. The code is becoming real enough that people around the world are beginning to see it as substitutes for human relationships, in very real ways.

AMY GOODMAN: We’re talking to Anjan Sundaram, journalist, author, host of the series Coded World. We also have with us David Wallace-Wells, New York Times opinion and science writer, who recently wrote a piece in The New York Times, “A.I. Is Being Built by People Who Think It Might Destroy Us.” If you can elaborate on this, David? But also, I think it’s so important to explain what AI, what artificial intelligence, is, because with so many people who lead extremely busy, hard-working lives, I think the reason it can go in directions that are completely, perhaps, out of control, and maybe dangerous, is because people don’t understand it. And so, when it comes to issues of regulation, leaving it to those who understand it even less, like the U.S. senators and congressmembers in the U.S. or politicians all over the world, is very frightening.

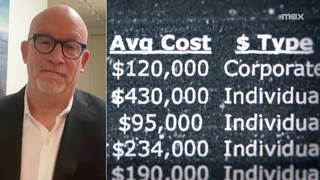

DAVID WALLACE-WELLS: Yeah, I think the recent congressional hearings show this pretty clearly, that we don’t have a kind of workable, clear definition of what we’re talking about, especially when we’re talking about regulation. Are we talking about the algorithms that, you know, help connect Uber drivers to Uber riders? Are we talking about the machine learning technology that has pointed to possible, you know, drug development pathways that hadn’t occurred to research scientists? Or are we talking about, you know, the ChatGPT and other AI chatbots that many Americans have been playing with over the last couple of months, in which we’re given some natural language version of a Google search result? Or are we talking about steps beyond that, that seem pulled out of science fiction, where these intelligent — these artificial intelligences may endeavor to sort of outwit and outmaneuver humans?

Personally, I’m considerably more skeptical that that kind of scenario is possible. And I find it a little bit distressing and disheartening that so many of the people who are talking about these issues at the highest levels, you know, the heads of some of these companies developing these technologies most ambitiously, are so focused on the existential risks of AI. I think the present-tense political risks of technological development through artificial intelligence are much more striking and important to deal with. And I know a number of researchers have been warning about those challenges for a long time and haven’t gotten nearly the audience that those people who think that this may lead to human extinction have gotten. And those issues relate to bias and discrimination. They relate to disinformation and the breakdown of our political systems because of the possible presence of so many sort of bots, you know, performing as humans on our screens in ways that confuse us. And, you know, I think that those issues are considerably more important to wrestle with.

More generally, I think that the challenge before us is to deal with this not as an existential challenge, but as a political challenge. And I think that the first thing that demands of us is to think hard about the interests that lie behind tech CEOs getting before Congress, talking not about the way that their technology may exacerbate inequality and extend bias and prejudice in our societies, but about the possibility of, you know, an artificial takeover of our nuclear arsenal, and what it means that those people are pointing to something that is almost too abstract to regulate, as opposed to the very real risks in the short term, that would, in theory at least, be much more manageable for American and international policymakers to focus on. I think there’s a real problem here. There’s a little bit of a bait and switch, where we’re being asked to imagine a sci-fi future almost as a way of distracting us from the disruptions these technologies will likely cause in our everyday lives in a much more short-term way. And I say that, in part, because I think those short-term challenges are real, but also, in part, because the sci-fi scenario seems much less plausible to me. I think that, to a large degree, many of these CEOs are essentially working from a playbook that they pulled from sci-fi that they read when they were, you know, preteens, and many of the people who are most serious about AI don’t share the same existential worries.

And when I look at the tools that have been unveiled to the public to this point, which is not to say they’re anything like the full scope that we’ll be dealing with 10 or 15 years from now, I see a search engine that makes mistakes all the time. And I don’t see a structural reason why that wouldn’t continue. I see a problem if we roll out large-scale search engines that are so riddled with mistakes, as ChatGPT seems to be at this point, and replace the much more reliable ones that we have, or displace something like Wikipedia, which is a remarkable tribute to collective wisdom. But I don’t know that we should be worrying now in 2023 about, you know, the Terminator scenario or the Matrix scenario. It seems to me a much more quotidian, real political struggle that we need to be dealing with. And I wish that we were focusing more on those challenges, as opposed to the sort of theological story that many of these CEOs want to believe they’re living in.

NERMEEN SHAIKH: And, David, if you could respond to those who say that, in fact, artificial intelligence may lead to, for example, the resolving or coming close to resolving, or at least addressing the climate crisis? You’ve expressed, of course, skepticism about this. And also respond to what Anjan said, what he discovered in his travels and research, reporting in Japan and India, but in particular in China, this Neuro Cap monitoring the thoughts of workers in a factory?

DAVID WALLACE-WELLS: Well, I don’t think we should think of that as an exclusively Chinese phenomenon. I think in the U.S., if I’m not mistaken, there are trucking companies that are using eye-tracking devices to make sure that their drivers are focused on the road and not texting. And that may have some safety benefits, but it is also a kind of an eerie and disconcerting step into a much more complete surveillance state than the one that any of us grew up living in. So I don’t think this is something that we can just attribute to Chinese authoritarian culture or a world over there where they’re less — they’re more gung-ho about developing the future, and less skeptical than, say, the average American is.

You know, the question of exactly, you know, where we go from here, and what disarray and what impacts AI may have on us, I think, is — you know, I just would reiterate what I said a few minutes ago. It’s a matter of dealing with the challenges that we’re faced with today. And I think that when we look outside at the sky and see, you know, the New York skyline turned orange, and think about breathing unbelievably unhealthy air from forest fires in Canada, I’m sorry, I just don’t see a way that artificial intelligence is going to fix that, because the challenge of the climate crisis is not technological. It is not technical.

We have the tools we need today to decarbonize almost all of the systems that we need to decarbonize, something — maybe 70, 80% of our carbon emissions. We have the tools we need today. And by the time we get around to cleaning up the last 20 or 30% two or three decades from now, we will have those tools then, too. We don’t need new innovation, exactly. What we need is political will and political focus to prioritize these challenges and their solutions, rather than treating them as manageable externalities of a much more important trajectory of growth that we’re heading on.

That’s a political question. It’s a political challenge. And I think we need to be dealing with AI in the same way that we deal with the climate crisis, in political terms rather than technological terms. I think we really fall into traps when we imagine that a tech solution will save us, for a number of reasons, including that we’ve been trained over the last decade, through our smartphones, to believe that technological progress is a matter of downloading an app, when in fact, especially when we’re dealing with the climate crisis, we’re talking about building out an entire new global infrastructure, not just around energy, but around housing and around transportation, around agriculture. These are things that we need to build out in the real world. And it’s not nearly as easy as just figuring out a new algorithm. It’s about deciding what to prioritize, how to compensate those people who are suffering as a result of the climate damages that are unfolding already, and how to compensate those who will be displaced by some of these projects. Those, again, are political challenges, not technological ones.

And I find there is something not just escapist about the worldview of tech CEOs who ask us to believe that AI has functionally already solved climate change, as Eric Schmidt has suggested in the past, or that it may help us in significant ways in the decades ahead to solve it, I think that there’s something misleading. I think it’s like a little bit of a bait and switch there, another bait and switch, where we’re asked not to transform the society that we have today in the ways that we know we can, if we had the will, but to simply wait for an algorithm to tell us what to do. We don’t need an algorithm to tell us what to do. We know carbon emissions are at an all-time high. A new report published today said that we need to get to net zero perhaps as soon as 2035 to avoid 1.5 degrees Celsius of warming, which is the stated goal of the Paris accords. There’s not a mystery of how to get there. It’s just that the world is not yet willing to do it. And what we are lacking is that commitment and that focus, not the tools. We have the tools. We’re just not using them.

AMY GOODMAN: David Wallace-Wells, before we move on, I want to go back to a point you made about corporations controlling or monitoring truck drivers by following their eye movements. And I was just wondering if you can explain that. What does that, and what are you talking about? Where have you seen that happening? How does that happen? When you said, you know, “eye movements,” I think people think iPhone, that you’re talking the letter “I” movements. You’re talking about the actual eye movements. So they wear special glasses?

DAVID WALLACE-WELLS: I’m honestly speaking a little bit, like, out of my pay grade. There’s a book and, I believe, a documentary about this that came out from a New America colleague of mine a year or two ago. I believe that the camera is embedded in the rearview mirror. But it’s not all that dissimilar from the way that Amazon monitors workers in its warehouses, making sure that they’re using bathroom breaks at only certain intervals. There is, you know, a growing movement of workplace surveillance in this country, indeed all around the world, as would be expected, given the relative affordability of surveillance mechanisms and the growing reach that they have.

I don’t know that I trust that five or 10 years from now, you know, American companies will be peeking into our brains, in part because I may be a little bit more skeptical about the technology’s ability to read that directly. But I do think that as we enter into a new industrial boom, which is happening in the U.S. thanks, in part, to the Inflation Reduction Act and the efforts to combat climate change, we also have to think about some of the workplace issues that arise from new industrial employment, including that there may be significantly more surveillance measures implemented by many of these companies than the average American is comfortable with.

Having said that, I saw a poll the other day that said that something like a third of Gen Z Americans would be comfortable with a total surveillance state within their — even within individual private homes, as a way of preventing, you know, some domestic disputes. And so, I do think that we may be farther along towards accommodating or normalizing some of these measures of surveillance than those of us who are a generation or so older may want to believe.

NERMEEN SHAIKH: Anjan, could you respond to what David has said? And also, I think you didn’t get an opportunity last time to talk about the people that you spoke to in India and across Europe, in fact — oh, and also the U.S.

ANJAN SUNDARAM: Yeah, sure. You know, I think when I see the development of AI, what I see happening is this age-old journey of seeking perfection. And I think that’s where a lot of — seeking perfection through mathematics, and it’s incredibly seductive. And this is why we have so many tech CEOs speaking in sort of religious terms almost. And, you know, artificial intelligence, they seem to promise an end to mortality, and they seem to be seeking — speaking in these terms, which are very seductive, especially in a time where religion, which was our traditional means of dealing with questions of human suffering, death of our loved ones, our own mortality — religion is waning.

And to the question of, you know, whether tech can be a solution to decarbonizing, I think I might point out that, indeed, there are technologies, for example, to pick one, electric cars. And many of the rare earth minerals and lithium that we need to power our, like, “clean” batteries rely on mining Indigenous territories and displacing Indigenous peoples. And so, these solutions are — you know, though they might seem seductive and appealing at a high level, at a societal or economic level, in the international economy, they still don’t change our relationship with the environment, you know, which is to mine it, which is to displace people, which has destroyed the last few pristine ecosystems that we have left, where many of these rare earth minerals are found, whether it’s in Chile or Mexico or in China.

And yeah, I mean, I’ll just end on a lighter note here. In India — you asked about India and the U.S. and Europe, you know, during — what I found during my travels there. I found, you know, AI also being used for incredible creativity. People were using AI to generate, you know, new forms of music, to collaborate and co-create music and visual graphics for parties. I found AI being used to create companions for the elderly who didn’t have family members left, because we are living longer in many countries than we used to be, and often our family members are gone, or societies have also become so nuclear, nuclear families, you know, that people don’t have a sense of community.

And so, I found, you know, artificial intelligence being used for many of these more mundane needs. And maybe it speaks to a sort of dystopic future where we don’t rely on our human connections and we seek technology to replace them. But nonetheless, people seem to find a sense of hope, beauty, perfection and excitement about the future also in these technologies. And that’s why technologists and companies and governments are so — are pursuing them. There is a — it speaks to a fundamental human desire, as well, that we need to acknowledge, if we are to regulate it, if we are to use it in a productive and not-so-damaging way.

AMY GOODMAN: Well, Anjan Sundaram, we want to thank you so much for being with us, journalist, author, and host of the series Coded World, that’s produced by Singapore national broadcaster Channel NewsAsia. And thank you also to David Wallace-Wells, New York Times opinion and science writer, author of The Uninhabitable Earth, who recently wrote a piece in The New York Times, “A.I. Is Being Built by People Who Think It Might Destroy Us.” We’ll link to all of your work, both of you. Anjan and David, thank you so much for being with us.

I’m Amy Goodman, with Nermeen Shaikh. To see Part 1 of our discussion, you can go to democracynow.org.
