In a dramatic hearing Tuesday, the CEO of the startup behind ChatGPT warned Congress about the dangers of artificial intelligence — his company’s own product. We discuss how to regulate AI and establish ethical guidelines with Marc Rotenberg, executive director of the Center for AI and Digital Policy. “We don’t have the expertise in government for the rapid technological change that’s now taking place,” says Rotenberg.

Transcript

AMY GOODMAN: This is Democracy Now!, democracynow.org, The War and Peace Report. I’m Amy Goodman, with Nermeen Shaikh.

As more of the public becomes aware of artificial intelligence, or AI, the Senate held a hearing Tuesday on how to regulate it. Senate Judiciary Subcommittee Chair Richard Blumenthal opened the hearing with an AI-generated recording of his own voice, what some call a “deep fake.”

SEN. RICHARD BLUMENTHAL: And now for some introductory remarks.

SEN. RICHARD BLUMENTHAL VOICE CLONE: Too often, we have seen what happens when technology outpaces regulation: the unbridled exploitation of personal data, the proliferation of disinformation and the deepening of societal inequalities. We have seen how algorithmic biases can perpetuate discrimination and prejudice, and how the lack of transparency can undermine public trust. This is not the future we want.

SEN. RICHARD BLUMENTHAL: If you were listening from home, you might have thought that voice was mine and the words from me. But, in fact, that voice was not mine, the words were not mine, and the audio was an AI voice-cloning software trained on my floor speeches. The remarks were written by ChatGPT when it was asked how I would open this hearing.

AMY GOODMAN: Google, Microsoft and OpenAI — the startup behind ChatGPT — are some of the companies creating increasingly powerful artificial intelligence technology. OpenAI CEO Sam Altman testified at Tuesday’s hearing and warned about its dangers.

SAM ALTMAN: I think if this technology goes wrong, it can go quite wrong. And we want to be vocal about that. We want to work with the government to prevent that from happening. But we try to be very clear-eyed about what the downside case is and the work that we have to do to mitigate that. … It’s one of my areas of greatest concern, the more general ability of these models to manipulate, to persuade, to provide sort of one-on-one, you know, interactive disinformation. … We are quite concerned about the impact this can have on elections. I think this is an area where, hopefully, the entire industry and the government can work together quickly.

AMY GOODMAN: This all comes as the United States has lagged on regulating AI compared to the European Union and China.

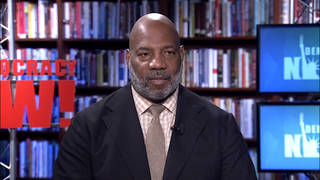

For more, we’re joined by Marc Rotenberg, executive director of the Center for AI and Digital Policy.

Marc, welcome to Democracy Now! It’s great to have you with us. Now, it’s very significant that you have this AI CEO saying, “Please regulate us.” But, in fact, isn’t he doing it because he wants corporations to be involved with the regulation? Talk about that, and also just what AI is, for people who just don’t understand what this is all about.

MARC ROTENBERG: Well, Amy, first of all, thank you for having me on the program.

And secondly, just to take a step back, Sam Altman received a lot of attention this week when he testified in Congress, but I think it’s very important to make clear at the beginning of our discussion that civil society organizations, experts in AI, technology developers have been saying for many, many years there’s a problem here. And I think it’s vitally important at this point in the policy discussion that we recognize that these views have been expressed by people like Timnit Gebru and Stuart Russell and Margaret Mitchell and the president of my own organization, Merve Hickok, who testified in early March before the House Oversight Committee that we simply don’t have the safeguards in place, we don’t have the legal rules, we don’t have the expertise in government for the rapid technological change that’s now taking place. So, while we welcome Mr. Altman’s support for what we hope will be strong legislation, we do not think he should be the center of attention in this political discussion.

Now, to your point, what is AI about and why is there so much focus? Part of this is about a very rapid change taking place in the technology and in the tech industry that many people simply didn’t see happening as it did. We have known problems with AI for many, many years. We have automated decisions today widely deployed across our country that make decisions about people’s opportunities for education, for credit, for employment, for housing, for probation, even for entering the country. All of this is being done by automated systems that increasingly rely on statistical techniques. And these statistical techniques make decisions about people that are oftentimes opaque and can’t be proven. And so you actually have a situation where big federal agencies and big companies make determinations, and if you went back and said, “Well, like, why was I denied that loan?” or, “Why is my visa application taking so many years?” the organizations themselves don’t have good answers.

So, that was reflected, in part, with Altman’s testimony this week. He is on the frontlines of a new AI technique that’s referred to generally as generative AI. It produces synthetic information. And if I can make a clarification to your opening about Senator Blumenthal’s remarks, those actually were not a recording, which is a very familiar term for us. It’s what we think of when we hear someone’s voice being played back. That was actually synthetically generated by Senator Blumenthal’s prior statements. And that’s where we see the connection to such concepts as deep fakes. This doesn’t exist in reality, but for the fact that an AI system created it.

We have an enormous challenge at this moment to try to regulate this new type of AI, as well as the preexisting systems, that are making decisions about people, oftentimes embedding bias, replicating a lot of the social discrimination in our physical world, now being carried forward in these data sets to our digital world. And we need the legislation that will establish the necessary guardrails.

NERMEEN SHAIKH: Marc Rotenberg, can you elaborate on the fact that so many artificial intelligence researchers themselves are worried about what artificial intelligence can lead to? A recent survey showed that half, 50%, of AI researchers give AI at least a 10% chance of causing human extinction. Could you talk about that?

MARC ROTENBERG: Yes, so, absolutely. And actually, I was, you know, one of the people who signed that letter that was circulated earlier this year. It was a controversial letter, by the way, because it tended to focus on the long-term existential risks, and it included such concerns as losing control over these systems that are now being developed. There’s another group in the AI community that I think very rightly said, about the existential concerns, that we also need to focus on the immediate concerns. And I spoke, for example, a moment ago about embedded bias and replicating discrimination. That’s happening right now. And that’s a problem that needs to be addressed right now.

Now, my own view, which is not necessarily the view of everyone else, is that both of these groups are sending powerful warnings about where we are. I do believe that the groups that are saying we have a risk of a loss of control, which includes many eminent computer scientists who have won the Turing Award, which is like the Nobel Prize for computer science, I think they’re right. I think there’s a real risk of loss of control. But I also agree with the people, you know, at the AI Now Institute and the Distributed AI Research Institute that we have to solve the problems with the systems that are already deployed.

And this is also the reason that I was, frankly, very happy about the Senate hearing this week. It was a very good hearing. There were very good discussions. I felt that the members of the committee came well prepared. They asked good questions. There was a lot of discussion about concrete proposals, transparency obligations, privacy safeguards, limits on compute and AI capability. And I very much supported what Senator Blumenthal said at the outset. You know, he said, “We need to build in rules for transparency, for accountability, and we need to establish some limits on use.” I thought that was an excellent place to start a discussion in the United States about how to establish safeguards for the use of artificial intelligence.

NERMEEN SHAIKH: And, Marc Rotenberg, what are the benefits that people talk about with respect to artificial intelligence? And given the rate, as you said, at which it’s spreading, these rapid technological advances, is there any way to arrest it at this point?

MARC ROTENBERG: Well, there’s no question that AI, I mean, broadly speaking — and, you know, of course, it is a broad term, and even the experts, of course, don’t even agree precisely on what we’re referring to, but let’s say AI, broadly speaking — you know, is contributing to innovation in the medical field, for example, big breakthroughs with protein folding. It’s contributing to efficiency in administration of organizations, better ways to identify safety flaws in products, in transportation. I think there’s no dispute. I mean, it’s a little bit like talking about fire or electricity. It’s one of these foundational resources in the digital age that is widely deployed. But as with fire or electricity, we understand that to maintain — to obtain the benefits, you know, you also need to put in some safeguards and some limits. And you see we’re actually in a moment right now where the AI techniques are being broadly deployed with hardly any safeguards or limits. And that’s why so many people in the AI community are worried. It’s not that they don’t see the benefits. It’s that they see the risks if we continue down this path.

AMY GOODMAN: Well, we want to talk about what those safeguards need to be and dig further into how it is that artificial intelligence could lead to the extinction of humans on Earth. We’re talking to Marc Rotenberg, the executive director of the Center for AI and Digital Policy. We’ll do Part 2, put it online at democracynow.org. I’m Amy Goodman, with Nermeen Shaikh.
